Speech recognition for computers

Fast and highly accurate spontaneous speech recognition

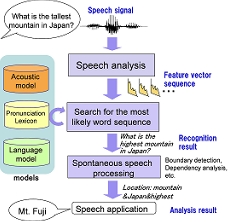

Automatic speech recognition (ASR) technology aims to give computers access to a primary human communication channel: speech. Given an audio signal, an ASR system first identifies the segments that contain speech. For each speech segment, it extracts a sequence of information-rich feature vectors and matches that sequence to the most likely word sequence, using previously trained speech models that reflect the salient features of a language's phonemes. Even when the spoken words are the same, real-world speech signals vary greatly with the speaker and the acoustic environment, especially in spontaneous speech in natural human conversation, where speech patterns are highly diverse, ambiguous and incomplete. Human beings absorb this variation remarkably well and can fill in missing components instantly. Our research on speech recognition technology aims to bring this human capacity for accurate, fast and robust speech recognition to computers.
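The first stage described above, finding the segments of an audio signal that contain speech, can be illustrated with a minimal sketch. It assumes a simple frame-energy threshold, which is a textbook stand-in and not the method used in the research presented here. The function names, frame sizes, threshold and toy signal are all hypothetical choices made for illustration.

```python
import math


def frame_energies(samples, frame_len=160, hop=80):
    """Mean squared amplitude of each overlapping frame (illustrative sizes)."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energies.append(sum(x * x for x in frame) / frame_len)
    return energies


def speech_segments(energies, threshold):
    """Return (first_frame, last_frame) pairs whose energy exceeds threshold."""
    segments = []
    start = None
    for i, energy in enumerate(energies):
        if energy > threshold and start is None:
            start = i  # a segment opens at the first frame above threshold
        elif energy <= threshold and start is not None:
            segments.append((start, i - 1))  # the segment closes at the previous frame
            start = None
    if start is not None:
        segments.append((start, len(energies) - 1))
    return segments


# Toy signal at an assumed 8 kHz sampling rate: silence, then a 440 Hz tone
# standing in for speech, then silence again.
silence = [0.0] * 800
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 8000) for n in range(1600)]
signal = silence + tone + silence

segments = speech_segments(frame_energies(signal), threshold=0.01)
print(segments)
```

A fixed energy threshold of this kind breaks down in noisy or reverberant conditions, which is one reason the speaker and environment variability described above makes robust recognition difficult.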

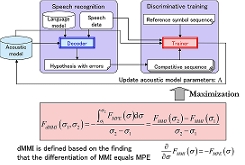

*1 Differenced Maximum Mutual Information

*2 Round-Robin Duel Discrimination

*3 Weighted Finite-State Transducer

[ References ]

[1] T. Hori, C. Hori, Y. Minami, and A. Nakamura, "Efficient WFST-based one-pass decoding with on-the-fly hypothesis rescoring in extremely large vocabulary continuous speech recognition," IEEE Transactions on Audio, Speech, and Language Processing, vol. 15, pp. 1352-1365, 2007.

[2] M. Delcroix, T. Nakatani, and S. Watanabe, "Static and dynamic variance compensation for recognition of reverberant speech with dereverberation preprocessing," IEEE Transactions on Audio, Speech, and Language Processing, vol. 17, no. 2, pp. 324-334, 2009.

[3] E. McDermott, S. Watanabe, and A. Nakamura, "Discriminative training based on an integrated view of MPE and MMI in margin and error space," Proc. ICASSP'10, pp. 4894-4897, 2010.

[4] T. Hori, S. Araki, T. Yoshioka, M. Fujimoto, S. Watanabe, T. Oba, A. Ogawa, K. Otsuka, D. Mikami, K. Kinoshita, T. Nakatani, A. Nakamura, and J. Yamato, "Low-latency real-time meeting recognition and understanding using distant microphones and omni-directional camera," IEEE Transactions on Audio, Speech, and Language Processing, vol. 20, no. 2, pp. 499-513, 2012.

[5] T. Oba, T. Hori, A. Nakamura, and A. Ito, "Round-Robin Duel Discriminative Language Models," IEEE Transactions on Audio, Speech, and Language Processing, vol. 20, no. 4, pp. 1244-1255, May 2012.

[6] Y. Kubo, S. Watanabe, T. Hori, and A. Nakamura, "Structural Classification Methods based on Weighted Finite-State Transducers for Automatic Speech Recognition," IEEE Transactions on Audio, Speech, and Language Processing, vol. 20, no. 8, pp. 2240-2251, 2012.

[7] M. Delcroix, K. Kinoshita, T. Nakatani, S. Araki, A. Ogawa, T. Hori, S. Watanabe, M. Fujimoto, T. Yoshioka, T. Oba, Y. Kubo, M. Souden, S.-J. Hahm, and A. Nakamura, "Speech recognition in living rooms: Integrated speech enhancement and recognition system based on spatial, spectral & temporal modeling of sounds," Computer Speech and Language, Elsevier, vol. 27, no. 3, pp. 851-873, 2013.

[8] Y. Kubo, T. Hori, and A. Nakamura, "Large Vocabulary Continuous Speech Recognition Based on WFST Structured Classifiers and Deep Bottleneck Features," Proc. ICASSP, 2013.

[9] M. Delcroix, S. Watanabe, T. Nakatani, and A. Nakamura, "Cluster-based dynamic variance adaptation for interconnecting speech enhancement pre-processor and speech recognizer," Computer Speech and Language, Elsevier, vol. 27, no. 1, pp. 350-368, 2013.

[10] T. Hori, Y. Kubo, and A. Nakamura, "Real-time one-pass decoding with recurrent neural network language model for speech recognition," Proc. ICASSP, 2014.

Copyright (C) NTT Communication Science Laboratories