Listening to human speech in noisy reverberant environments

Audio communication scene analysis by computer

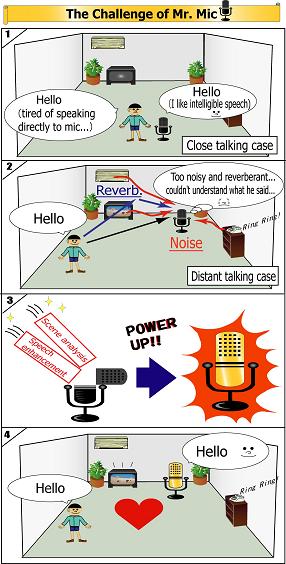

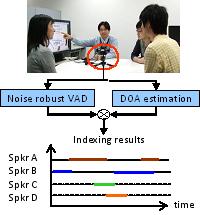

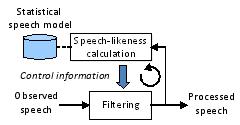

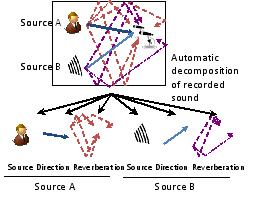

Speech is one of the most natural and useful media for human communication. If computers could handle speech signals appropriately in our daily lives, they could provide us with more convenient and comfortable speech services. However, when speech is captured by distant microphones, background noise and reverberation contaminate the original signal and severely degrade the performance of existing speech applications. To overcome these limitations, we are investigating methods for automatically detecting the individual speech signals contained in the captured signals (scene analysis) and restoring their original quality (speech enhancement). Our research goal is to establish techniques that extract information such as "who spoke when" from human communication scenes and that enable a wide range of speech applications to work reliably in the real world.
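To make "contaminate" concrete, the sketch below uses the common textbook model of a distant-microphone recording: reverberation is the clean speech s convolved with a room impulse response h, and background noise n is added on top, giving x(t) = (h * s)(t) + n(t). This is a standard simplification assumed here for illustration, not a description of the laboratory's own models. The impulse response is synthetic (a hypothetical direct-path tap followed by an exponentially decaying random tail), the test signal is a pure tone standing in for speech, and the function names are illustrative.

```python
import math
import random


def convolve(signal, rir):
    """Full linear convolution: reverberation modeled as filtering by a room impulse response."""
    out = [0.0] * (len(signal) + len(rir) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(rir):
            out[i + j] += s * h
    return out


def synthetic_rir(length, decay, seed=0):
    """Hypothetical RIR: a direct-path tap of 1.0 followed by an exponentially decaying random tail."""
    rng = random.Random(seed)
    return [1.0] + [rng.gauss(0.0, 1.0) * math.exp(-decay * k) for k in range(1, length)]


def observe(clean, rir, noise_std, seed=1):
    """Distant-microphone observation x = h * s + n, truncated to the clean signal's length."""
    rng = random.Random(seed)
    reverberant = convolve(clean, rir)[: len(clean)]
    return [r + rng.gauss(0.0, noise_std) for r in reverberant]


def snr_db(clean, degraded):
    """Power of the clean signal relative to the power of everything added to it, in dB."""
    signal_power = sum(c * c for c in clean)
    distortion_power = sum((d - c) ** 2 for c, d in zip(clean, degraded))
    return 10.0 * math.log10(signal_power / distortion_power)


if __name__ == "__main__":
    fs = 8000  # assumed sampling rate in Hz
    # 0.1 s, 200 Hz tone used as a stand-in for a speech signal
    clean = [math.sin(2 * math.pi * 200 * t / fs) for t in range(800)]
    rir = synthetic_rir(length=400, decay=0.01)
    for noise_std in (0.01, 0.1):
        x = observe(clean, rir, noise_std)
        print(f"noise_std={noise_std}: SNR vs. clean = {snr_db(clean, x):.1f} dB")
```

In this simplified picture, dereverberation tries to undo the effect of h and noise suppression tries to remove n; raising `noise_std` or lengthening the reverberant tail lowers the SNR measured against the clean signal.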


Copyright (C) NTT Communication Science Laboratories