Papers

- Hirokazu Kameoka, Takuhiro Kaneko, Kou Tanaka, Nobukatsu Hojo, "StarGAN-VC: Non-parallel many-to-many voice conversion with star generative adversarial networks," arXiv:1806.02169 [cs.SD], Jun. 2018.

StarGAN-VC

StarGAN-VC is a method for non-parallel many-to-many voice conversion (VC) using a variant of generative adversarial networks (GANs) called StarGAN [1]. Our method is particularly noteworthy in that it (1) requires neither parallel utterances, transcriptions, nor time alignment procedures for speech generator training, (2) simultaneously learns many-to-many mappings across different attribute domains using a single generator network, (3) is able to generate signals of converted speech quickly enough to allow for real-time implementations and (4) requires only several minutes of training examples to generate reasonably realistic-sounding speech.

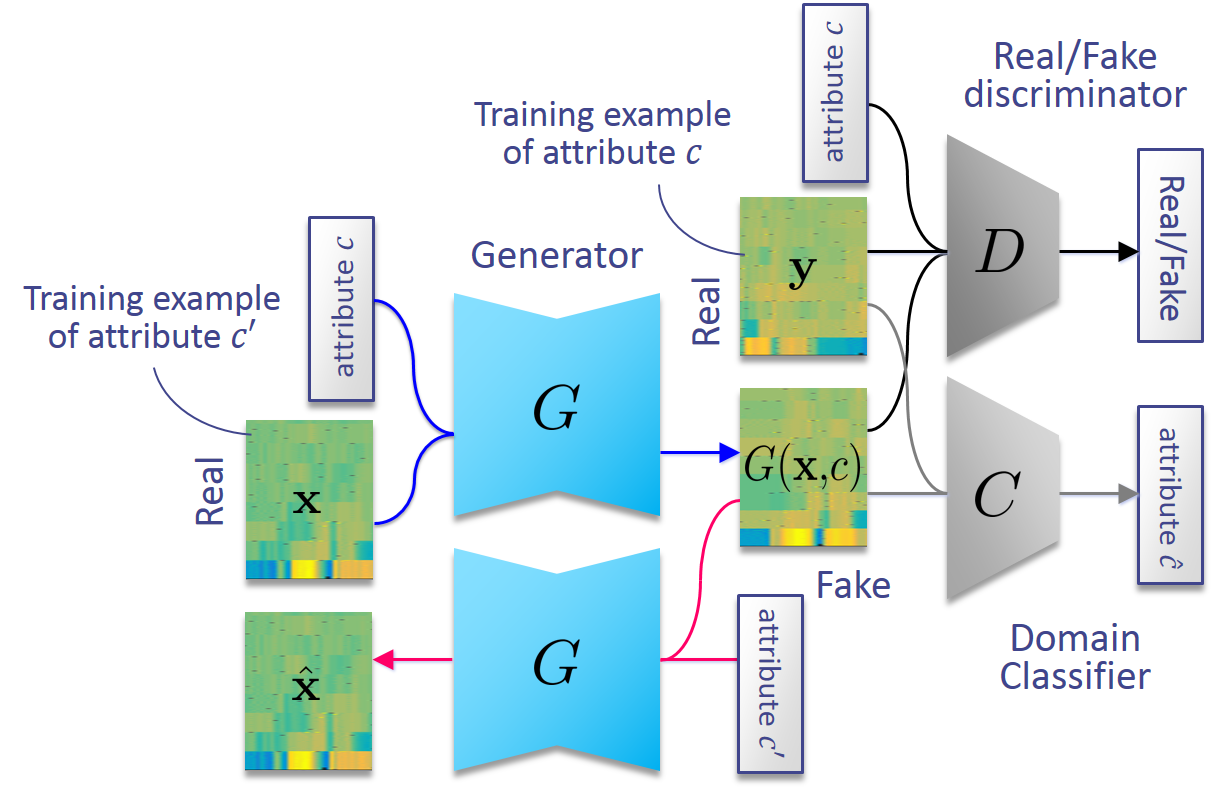

We previously proposed a non-parallel VC method using a GAN variant called the cycle-consistent GAN (CycleGAN) [2], which was originally proposed as a method for translating images using unpaired training examples. While CycleGAN-VC only learns one-to-one mappings, StarGAN-VC is capable of simultaneously learning many-to-many mappings using a single encoder-decoder type generator network G where the attributes of the generator outputs are controlled by an auxiliary input c. Here, c denotes an attribute label, represented as a concatenation of one-hot vectors, each of which is filled with 1 at the index of a class in a certain category and with 0 everywhere else. For example, if we consider speaker identities as the only attribute category, c will be represented as a single one-hot vector, where each element is associated with a different speaker. Now, we would want to make G(x,c) as realistic as real speech features and properly belong to attribute c. To these ends, we introduce a real/fake discriminator D, whose role is to classify whether an input is real or fake, and a domain classifier C, whose role is to predict to which classes an input belongs. By using D and C, G can be trained in such a way that G(x,c) is misclassified as a real speech feature sequence by D and correctly classified as belonging to attribute c by C. We also use a cycle consistency loss for the generator training to ensure that the mappings between each pair of attribute domains will preserve linguistic information.

Links to related web sites

Please also refer to the following web sites for comparison.

- VAEGAN-VC [4] (External link)

- CycleGAN-VC [2] (Our work)

- ACVAE-VC (Our work)

- Links to my other work

Audio examples

Here, we demonstrate audio examples of StarGAN-VC tested on a non-parallel many-to-many speaker identity conversion task. We selected speech of two female speakers, 'SF1' and 'SF2', and two male speakers, 'SM1' and 'SM2', from the Voice Conversion Challenge (VCC) 2018 dataset [3] for training and evaluation. Thus, there were twelve different combinations of source and target speakers in total. The audio files for each speaker were manually segmented into 116 short sentences (about 7 minutes) where 81 and 35 sentences (about 5 and 2 minutes) were provided as training and evaluation sets, respectively. Subjective evaluation experiments revealed that StarGAN-VC obtained higher sound quality and speaker similarity than a state-ofthe-art method based on variational autoencoding GANs [4]. Audio examples of the VAEGAN approach are demonstrated at the authors' website [5].

SM1 (Male) → SF1 (Female)

SF2 (Female) → SM2 (Male)

SM2 (Male) → SM1 (Male)

SF1 (Female) → SF2 (Female)

References

[1] Y. Choi, M. Choi, M. Kim, J.-W. Ha, S. Kim, and J. Choo, "StarGAN: Unified generative adversarial networks for multidomain image-to-image translation," arXiv:1711.09020 [cs.CV], Nov. 2017.

[2] T. Kaneko and H. Kameoka, "Parallel-data-free voice conversion using cycle-consistent adversarial networks," arXiv:1711.11293 [stat.ML], Nov. 2017.

[3] J. Lorenzo-Trueba, J. Yamagishi, T. Toda, D. Saito, F. Villavicencio, T. Kinnunen, and Z. Ling, "The voice conversion challenge 2018: Promoting development of parallel and nonparallel methods," arXiv:1804.04262 [eess.AS], Apr. 2018.

[4] C.-C. Hsu, H.-T. Hwang, Y.-C. Wu, Y. Tsao, and H.-M. Wang, “Voice conversion from unaligned corpora using variational autoencoding Wasserstein generative adversarial networks,” in Proc. The Annual Conference of the International Speech Communication Association (Interspeech), 2017, pp. 3364–3368.