Media and Communication

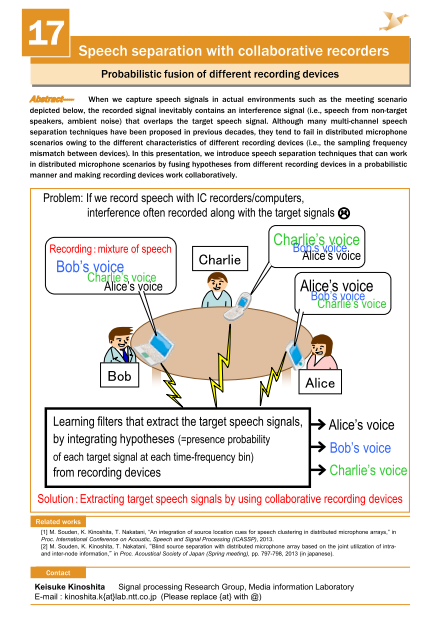

Speech separation with collaborative recorders

- Probabilistic fusion of different recording devices -

Abstract

When speech is captured in real environments, such as the meeting scenario illustrated in the figure, the recorded signal inevitably contains interference (e.g., speech from non-target speakers and ambient noise) that overlaps the target speech. Many multichannel speech separation techniques have been proposed over the past decades. However, they tend to fail in distributed microphone scenarios because different recording devices have different characteristics (e.g., mismatched sampling frequencies). In this presentation, we introduce speech separation techniques that work in distributed microphone scenarios by making the recording devices collaborate and by fusing their hypotheses in a probabilistic manner.
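The abstract does not spell out the fusion rule. The following is a minimal sketch of what "fusing hypotheses in a probabilistic manner" can look like. It assumes that each device has already produced, for every time-frequency bin, a posterior over which speaker dominates that bin, and that speaker labels are consistent across devices. The function names, the per-device trust weights, and the weighted log-linear (product-of-experts) rule are illustrative assumptions, not necessarily the method of the cited papers. One appeal of combining devices at the posterior level, rather than stacking raw channels, is that sample-accurate synchronization between devices is not required. The sketch still assumes that the devices' time-frequency grids have been aligned.

```python
import math
from typing import List, Sequence


def fuse_posteriors(device_posteriors: Sequence[Sequence[float]],
                    weights: Sequence[float]) -> List[float]:
    """Hypothetical helper: fuse per-device source posteriors for ONE time-frequency bin.

    device_posteriors[k][j] is device k's posterior that source j dominates the bin;
    weights[k] >= 0 expresses how much device k is trusted (0 ignores it).
    Assumed rule (weighted product of experts): p(j) ∝ prod_k p_k(j) ** w_k
    """
    if not device_posteriors:
        raise ValueError("at least one device is required")
    if len(device_posteriors) != len(weights):
        raise ValueError("one weight per device is required")
    num_sources = len(device_posteriors[0])
    eps = 1e-12  # guards log(0) when a device assigns zero probability
    log_scores = [0.0] * num_sources
    for post, w in zip(device_posteriors, weights):
        if len(post) != num_sources:
            raise ValueError("all devices must score the same set of sources")
        for j, p in enumerate(post):
            log_scores[j] += w * math.log(max(p, eps))
    # Normalize in the log domain (log-sum-exp style) for numerical stability.
    m = max(log_scores)
    exps = [math.exp(s - m) for s in log_scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_masks(per_device: Sequence[Sequence[Sequence[float]]],
               weights: Sequence[float]) -> List[List[float]]:
    """Hypothetical helper: apply fuse_posteriors to every time-frequency bin.

    per_device[k][t][j]: device k, flattened time-frequency bin t, source j.
    Returns fused[t][j], which can be used as soft separation masks.
    """
    num_bins = len(per_device[0])
    return [fuse_posteriors([dev[t] for dev in per_device], weights)
            for t in range(num_bins)]
```

For example, if device A assigns a bin to speaker 1 with probability 0.9 and device B with probability 0.6, equal weights give a fused posterior of 0.54 / (0.54 + 0.04) ≈ 0.93 for speaker 1. Agreement between devices sharpens the decision, while a device with weight 0 has no influence.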

Reference

- M. Souden, K. Kinoshita, T. Nakatani, "An integration of source location cues for speech clustering in distributed microphone arrays," in Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2013 (to appear).

- M. Souden, K. Kinoshita, M. Delcroix, T. Nakatani, "Distributed microphone array processing for speech source separation with classifier fusion," in Proc. IEEE International Workshop on Machine Learning for Signal Processing (MLSP), pp. 1-6, 2012.

Presenters

Marc Delcroix

Media Information Laboratory

Nobutaka Ito

Media Information Laboratory

Masakiyo Fujimoto

Media Information Laboratory

Takuya Yoshioka

Media Information Laboratory