Media and Communication

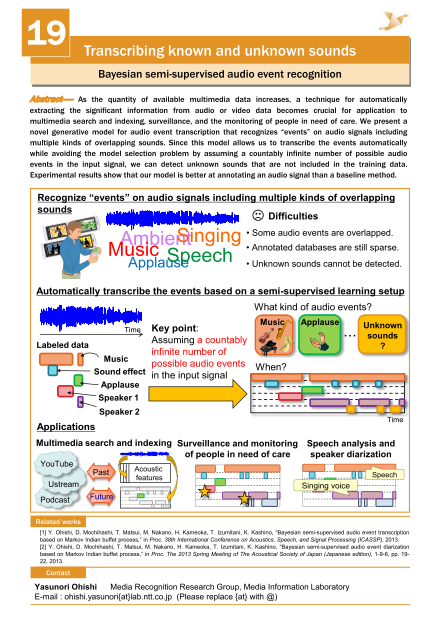

Transcribing known and unknown sounds

- Bayesian semi-supervised audio event recognition -

Abstract

As the quantity of available multimedia data increases, a technique for automatically extracting the significant information from audio or video data becomes crucial for application to multimedia search and indexing, surveillance, and the monitoring of people in need of care. We present a novel generative model for audio event transcription that recognizes “events” on audio signals including multiple kinds of overlapping sounds. Since this model allows us to transcribe the events automatically while avoiding the model selection problem by assuming a countably infinite number of possible audio events in the input signal, we can detect unknown sounds that are not included in the training data. Experimental results show that our model is better at annotating an audio signal than a baseline method.

Reference

- Y, Ohishi, D. Mochihashi, T. Matsui, M. Nakano, H. Kameoka, T. Izumitani, K. Kashino, “Bayesian semi-supervised audio event transcription based on Markov Indian buffet process,” in Proc. 38th International Conference on Acoustics, Speech, and Signal Processing (ICASSP) 2013.

- Y, Ohishi, D. Mochihashi, T. Matsui, M. Nakano, H. Kameoka, T. Izumitani, K. Kashino, “Bayesian Semi-supervised Audio Event Diarization based on Markov Indian Buffet Process,” in Proc. The 2013 Spring Meeting of The Acoustical Society of Japan (Japanese edition), 1-9-6, pp. 19-22, 2013.

Presentor

Masahiro Nakano

Media Information Laboratory

Media Information Laboratory

Kunio Kashino

Media Information Laboratory

Media Information Laboratory