Papers

- Hirokazu Kameoka, Takuhiro Kaneko, Shogo Seki, Kou Tanaka, "CAUSE: Crossmodal action unit sequence estimation from speech with application to facial animation synthesis," in Proc. The 23rd Annual Conference of the International Speech Communication Association (Interspeech 2022), pp. 506-510, Sep. 2022.

CAUSE

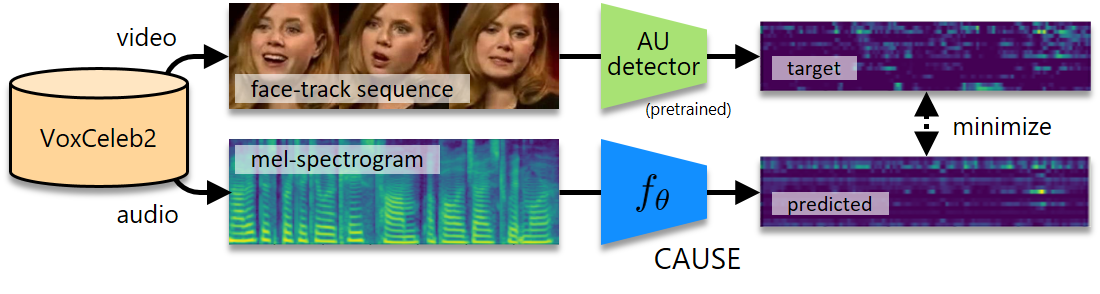

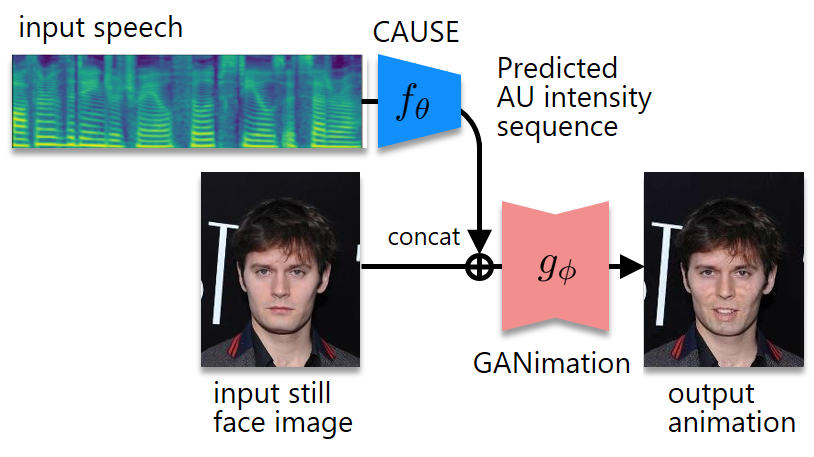

CAUSE, which stands for "crossmodal action unit sequence estimation/estimator", is a method or a model for estimating a sequence of facial action units (AUs) [1] solely from speech. The goal is to train a neural network model (called the CAUSE network) that takes the mel-spectrogram of speech and produces a sequence of AU intensities by using a large-scale audio-visual dataset consisting of many speaking face-tracks. The training scheme is inspired by the crossmodal emotion transfer (CME) [2], which uses the teacher-student learning framework to train a speech emotion recognizer on a dataset of speaking face-tracks. Below, we demostrate examples of facial animation synthesis from speech using CAUSE combined with "GANimation" [3], an image-to-image translation method based on generative adversarial networks (GANs) [4][5].

Action Unit (AU)

AUs, introduced in the facial action coding system (FACS) proposed in the late 1970s, are facial muscular activity units associated with the contraction or relaxation of specific facial muscles, and can describe nearly any anatomically possible facial expression.

Crossmodal emotion transfer (CME)

CME is a method for predicting a sequence of the distributions over eight emotional states (neutral, happiness, surprise, sadness, anger, disgust, fear, and contempt) from speech. The idea is to first train a facial expression recognizer ("teacher") using labelled face images, and then train a speech emotion recognizer ("student") using the audio part of unlabelled videos of human speech in the wild so that its predictions match those of the teacher on the face-tracks of the corresponding segments.

GANimation

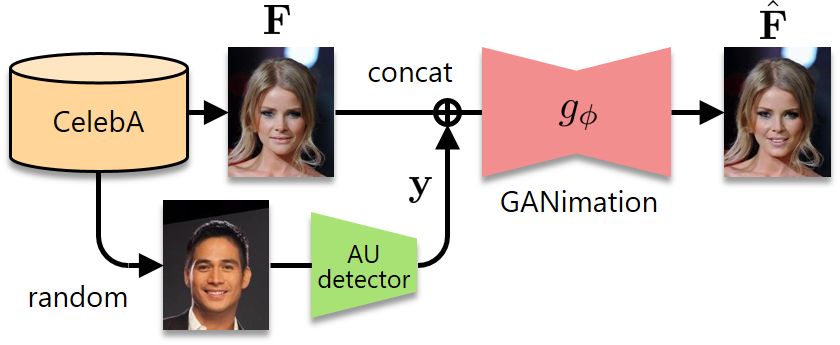

GANimation is a GAN-based image-to-image translation method that makes it possible to modify the facial expression in a still face image without changing the person identity by using AUs as conditioning variables.

Synthesized animation examples

Our facial animation synthesis system produces a facial animation from speech and a single image. For comparison, examples of synthesized animations for different architectures (frame-independent fully-connected network (i.e., multilayer perceptron; MLP), recurrent network (RNN), regular convolutional network (CNN), and dilated convolutional network (DCNN), respectively) of the CAUSE network are provided. Examples are also given for a version that uses the emotional state distribution vectors extracted by CME instead of the AU intensity vectors. In accordance with this change, in this version, GANimation was trained using the emotional state distribution vectors as the conditioning variables instead. For generating these examples, we used the development set in the VoxCeleb2 dataset [6] for training the CAUSE network and the student network in CME, and the first 162770 images in the CelebA dataset [7] for training GANimation. We also used the FERPlus dataset [8] for training the teacher nework in CME. Note that the left and right regions of each video correspond to the input image and generated animation, respectively. If you cannot hear the sound when playing the video, please use another browser such as Google Chrome, Mozilla Firefox, and Microsoft Edge.

|

Change speech (current: ) |

||||

|---|---|---|---|---|

|

Change image (current: #) |

CAUSE | CNN | ||

| DCNN | ||||

| RNN | ||||

| MLP | ||||

| CME | CNN | |||

Links to related pages

Please also refer to the following web sites.

References

[1] P. Ekman and W. V. Friesen, Facial action coding system: a technique for the measurement of facial movement, Consulting Psychologists Press, Palo Alto, CA, USA, 1978.

[2] S. Albanie, A. Nagrani, A. Vedaldi, and A. Zisserman, "Emotion recognition in speech using cross-modal transfer in the wild," in Proc. ACM Multimedia, 2018.

[3] A. Pumarola, A. Agudo, A. M. Martinez, A. Sanfeliu, and F. Moreno-Noguer, "GANimation: Anatomically-aware facial animation from a single image," in Proc. ECCV, 2018.

[4] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, "Generative adversarial nets," in Adv. NIPS, pp. 2672–2680, 2014.

[5] M. Arjovsky, S. Chintala, and L. Bottou, "Wasserstein generative adversarial networks," in Proc. ICML, pp. 214–223, 2017.

[6] J. S. Chung, A. Nagrani, and A. Zisserman, "VoxCeleb2: Deep speaker recognition," in Proc. Interspeech, 2015.

[7] Z. Liu, P. Luo, X. Wang, and X. Tang, "Deep learning face attributes in the wild," in Proc. ICCV, 2015.

[8] E. Barsoum, C. Zhang, C. C. Ferrer, and Z. Zhang, "Training deep networks for facial expression recognition with crowd-sourced label distribution," in Proc. ICMI, 2016.