Paper

Takuhiro Kaneko, Kaoru Hiramatsu, and Kunio Kashino,

Generative Attribute Controller with Conditional Filtered Generative Adversarial Networks.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.


Abstract

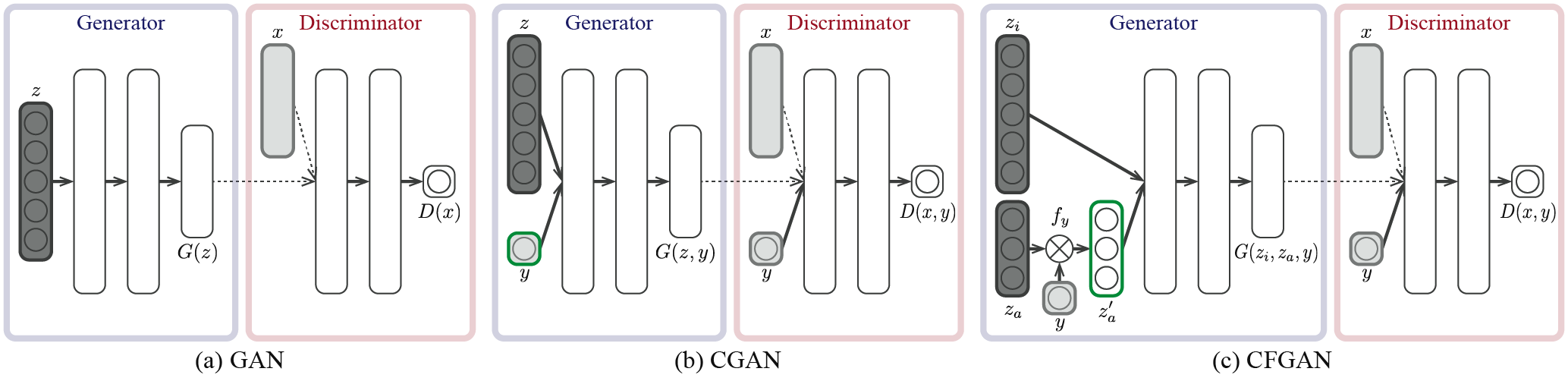

We present a generative attribute controller (GAC), a novel functionality for generating or editing an image while intuitively controlling large variations of an attribute. This controller is based on a novel generative model called the conditional filtered generative adversarial network (CFGAN), an extension of the conventional conditional GAN (CGAN) that incorporates a filtering architecture into the generator input. The conventional CGAN represents an attribute directly using an observable variable (e.g., the binary indicator of attribute presence), so its controllability is restricted to attribute labeling (e.g., an ON or OFF control). In contrast, the CFGAN has a filtering architecture that associates an attribute with a multi-dimensional latent variable, enabling latent variations of the attribute to be represented. We also define the filtering architecture and training scheme with controllability in mind, so that the variations of the attribute can be intuitively controlled using typical controllers (radio buttons and slide bars). We evaluate our CFGAN on the MNIST, CUB, and CelebA datasets and show that it enables large variations of an attribute to be not only represented but also intuitively controlled while retaining identity. We also show that the learned latent space has enough expressive power to conduct attribute transfer and attribute-based image retrieval.

What is CFGAN?

This figure shows the differences in network architecture between GAN [1], conditional GAN (CGAN) [2], and conditional filtered GAN (CFGAN). Dark gray indicates latent variables, while light gray indicates observable variables. Variables surrounded by green lines can be used to control an attribute. (a) In GAN, an attribute is not explicitly represented, so the generator cannot be controlled with respect to it. (b) In CGAN, an attribute is represented using observable attribute labeling \( y \), e.g., the binary indicator of attribute presence, so its controllability is restricted to attribute labeling, e.g., an ON or OFF control. (c) In CFGAN, a filtering architecture \( f_y \) is incorporated into the generator input. This filtering architecture associates attribute labeling \( y \) with a multi-dimensional latent variable \( z_a' \), so the generator can be controlled multi-dimensionally, i.e., more expressively. Different designs of the filtering architecture yield different forms of control. In our paper, we define three filtering architectures that allow variations of an attribute to be intuitively controlled using typical GUI controllers, i.e., radio buttons and slide bars.
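To make the contrast between panels (a), (b), and (c) concrete, the following Python sketch builds the generator input for each architecture. It is an illustration under stated assumptions, not the paper's implementation: the names `z_i` (the latent variable not tied to the attribute), `z_a` (the attribute latent before filtering), and every function name are hypothetical, and the filter \( f_y \) is assumed here to be a simple elementwise gate \( z_a' = y \cdot z_a \). This gate is not one of the three filtering architectures defined in the paper. A slide bar is mimicked by sweeping one dimension of `z_a` while `z_i` is held fixed.

```python
import random
from typing import List

Vector = List[float]


def gan_input(z: Vector) -> Vector:
    """(a) GAN: the generator sees only latent noise; no attribute input."""
    return list(z)


def cgan_input(z: Vector, y: int) -> Vector:
    """(b) CGAN: latent noise concatenated with an observable label y in {0, 1}."""
    return list(z) + [float(y)]


def filter_attribute(z_a: Vector, y: int) -> Vector:
    """Hypothetical filter f_y: gate the attribute latent by the binary label.

    ASSUMPTION: elementwise gating is an illustrative choice only; the paper
    defines its own three filtering architectures.
    """
    return [y * v for v in z_a]


def cfgan_input(z_i: Vector, z_a: Vector, y: int) -> Vector:
    """(c) CFGAN: z_i concatenated with the filtered latent z_a' = f_y(z_a)."""
    return list(z_i) + filter_attribute(z_a, y)


def slide_bar_sweep(z_i: Vector, z_a: Vector, y: int, dim: int,
                    values: List[float]) -> List[Vector]:
    """Mimic a slide bar: vary one dimension of z_a while z_i stays fixed."""
    inputs = []
    for v in values:
        z_a_mod = list(z_a)
        z_a_mod[dim] = v
        inputs.append(cfgan_input(z_i, z_a_mod, y))
    return inputs


if __name__ == "__main__":
    rng = random.Random(0)
    z_i = [rng.gauss(0.0, 1.0) for _ in range(4)]    # non-attribute latent
    z_a = [rng.uniform(0.0, 1.0) for _ in range(3)]  # 3-dim attribute latent
    sweep_on = slide_bar_sweep(z_i, z_a, y=1, dim=0, values=[0.0, 0.5, 1.0])
    sweep_off = slide_bar_sweep(z_i, z_a, y=0, dim=0, values=[0.0, 0.5, 1.0])
    print(len(set(map(tuple, sweep_on))))   # 3 distinct generator inputs
    print(len(set(map(tuple, sweep_off))))  # 1: the gate zeroes z_a
```

With the label ON, the three slider positions give three distinct generator inputs; with the label OFF, this particular gate zeroes the attribute latent, so every slider position maps to the same input. The gate was chosen only for brevity; any filter that maps \( y \) and a multi-dimensional latent to \( z_a' \) would fit the same input-construction pattern.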


References

[1] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio, Generative Adversarial Nets. Advances in Neural Information Processing Systems (NIPS), 2014.

[2] Mehdi Mirza and Simon Osindero, Conditional Generative Adversarial Nets. arXiv, 2014.

Contact

Takuhiro Kaneko

NTT Communication Science Laboratories, NTT Corporation

takuhiro.kaneko.tb at hco.ntt.co.jp