Katsuhiko Ishiguro, Ph. D. - research

Fields & Interests

Machine Learning and Statistical Models

Statistical models for representing and analyzing complex real-world data.

Recently I have been working on nonparametric Bayesian models for time-series data and relational data.

- Nonparametric Bayes models, probabilistic models

- Multi-variate analysis and kernel techniques

- Time series analysis

Multi-modal information processing

Auditory and visual information processing with

statistical pattern recognition techniques.

This includes analyzing surveillance camera movies and audio recordings of uncontrolled scenes with the aforementioned Bayesian statistical models.

- Multi-target tracking for video data

- Feature-based image recognition

- Speech / sound recognition and modeling

Artificial intelligence and computational cognitive robotics

How to build a robot or machine agent that can communicate with humans as humans do:

this is the core interest of my research activities.

I have been studying the literature in robotics, cognitive science and artificial intelligence,

and am fascinated by the idea of "understanding by building" in cognitive robotics

as a way to address the following fundamental questions.

- Development of communication capacity of / with humans

- Concept and language acquisition through multi-modal information interaction

- Intelligent and efficient information processing skills for massive and complicated real-world data

Topics

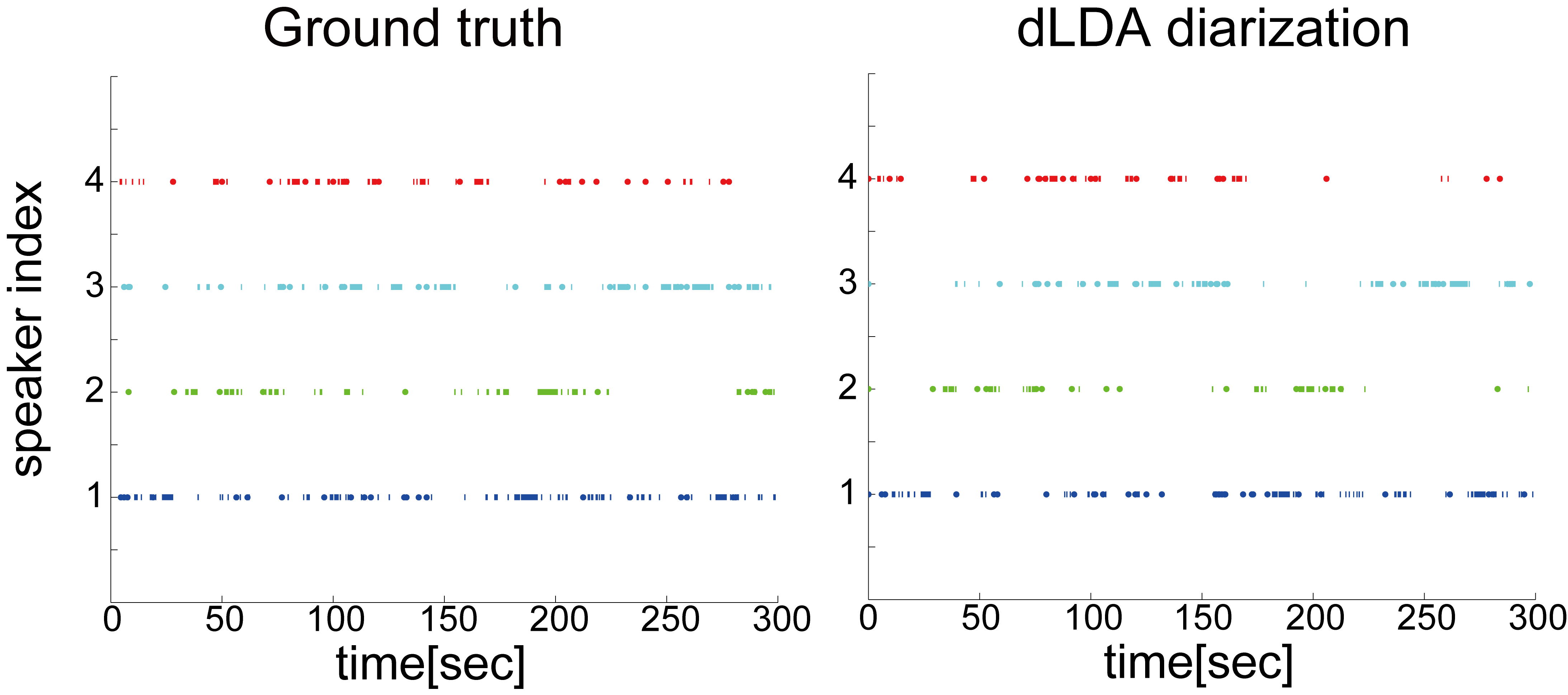

Fully Bayesian speaker diarization model

Speaker diarization is a relatively new topic in the audio processing community:

automatically estimating "who spoke when" in given conversation recordings.

This technique is useful for on-site auto-annotation of minutes,

speech signal enhancement and human-computer interfaces in "wild" environments.

One of the key problems for speaker diarization is

to estimate the number and the locations of the speakers in the conversation.

In this research, we introduce a new model called dynamic Latent Dirichlet Allocation (dLDA for short)

for speaker diarization.

Our model is inspired by observations of speaker "turn-taking" during conversations,

and is fully probabilistic, in contrast to previous work, which was based on heuristics.

We developed a fast iterative inference algorithm based on Variational Bayes, which

enables us to obtain good estimates.

For details, please consult the following paper:

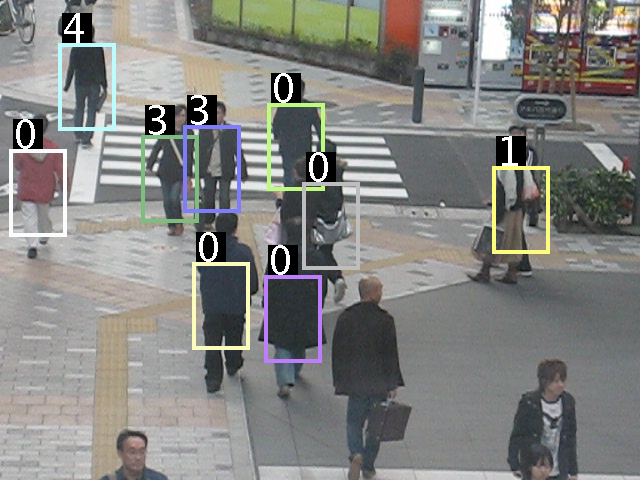

Tracking multiple targets while estimating the targets' dynamics

Multi-target tracking in movie scenes has become one of the most promising techniques in computer vision.

Most tracking algorithms require specifying the model of target movements in advance.

In this research, we developed a fully Bayesian model for simultaneous tracking and dynamics learning of multiple targets.

We adopted a nonparametric Bayes model known as the Dirichlet Process Mixture, which, combined with a mixture of Kalman filters,

is able to aggregate movements in the scenes into a small number of patterns.

The number of targets is time-varying, and this change is also modeled as a stochastic process.

We derived a composite model of existing tracking models,

and achieved an efficient inference algorithm based on Particle filters.
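
As a rough illustration of one building block mentioned above, the following is a minimal sketch of a single linear Kalman filter predict/update cycle for one target. It is not the model from this research: the constant-velocity state, the matrices `F`, `H`, `Q`, `R` and the measurement values are all assumptions chosen for illustration, and the Dirichlet Process Mixture and particle filter parts are not shown.

```python
import numpy as np

def kalman_step(x, P, z, F, H, Q, R):
    """One predict/update cycle of a linear Kalman filter."""
    # Predict: propagate the state and its covariance through the dynamics.
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q
    # Update: correct the prediction with the new measurement z.
    innovation = z - H @ x_pred
    S = H @ P_pred @ H.T + R              # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)   # Kalman gain
    x_new = x_pred + K @ innovation
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new

# Hypothetical 1-D constant-velocity target: state = [position, velocity].
dt = 1.0
F = np.array([[1.0, dt], [0.0, 1.0]])   # assumed dynamics
H = np.array([[1.0, 0.0]])              # only the position is observed
Q = 0.01 * np.eye(2)                    # assumed process noise
R = np.array([[0.25]])                  # assumed measurement noise

x = np.array([0.0, 0.0])
P = np.eye(2)
for z in [1.0, 2.1, 2.9, 4.2, 5.0]:     # made-up noisy positions
    x, P = kalman_step(x, P, np.array([z]), F, H, Q, R)

print("estimated position and velocity:", x)
```

In a mixture of Kalman filters, each movement pattern would have its own dynamics matrix, so that targets sharing a pattern also share a filter model.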

For details, please consult the following paper:

Detecting causal relationships in bivariate time series

Humans can infer, or hypothesize, a causal relationship between

two events observed successively in time.

In the fields of nonlinear physics and financial time series analysis,

the detection of such causal relationships between multiple time series has been

extensively studied.

In this line of research, we focus on developing techniques for

causal relationship discovery from bivariate time series.

We reported extensive comparison results for several Granger causality-related techniques.

We also invented the Causality Marker technique,

a novel method for detecting temporal and asymmetric causal connectivities among the sources.
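
For readers unfamiliar with Granger causality, the basic idea can be sketched as follows: x is said to Granger-cause y if the past of x improves the prediction of y beyond what the past of y alone achieves. The code below is a minimal textbook-style sketch using least-squares autoregressive fits and an F statistic. It is not the Causality Marker technique, and the lag order `p`, the helper names and the synthetic data are assumptions made for illustration.

```python
import numpy as np

def lagged(series, p):
    """Columns series[t-1], ..., series[t-p] for t = p, ..., n-1."""
    n = len(series)
    return np.column_stack([series[p - k : n - k] for k in range(1, p + 1)])

def residual_sum_of_squares(design, target):
    """Residual sum of squares of an ordinary least-squares fit."""
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return float(np.sum((target - design @ coef) ** 2))

def granger_f(x, y, p=2):
    """F statistic for the hypothesis 'x Granger-causes y' with lag order p."""
    target = y[p:]
    intercept = np.ones((len(target), 1))
    restricted = np.hstack([intercept, lagged(y, p)])   # past of y only
    full = np.hstack([restricted, lagged(x, p)])        # past of y and of x
    rss_r = residual_sum_of_squares(restricted, target)
    rss_f = residual_sum_of_squares(full, target)
    dof = len(target) - full.shape[1]
    return ((rss_r - rss_f) / p) / (rss_f / dof)

# Synthetic example (assumed data): y follows x with a one-step delay.
rng = np.random.default_rng(0)
n = 500
x = rng.standard_normal(n)
y = np.zeros(n)
y[1:] = 0.8 * x[:-1] + 0.5 * rng.standard_normal(n - 1)

f_xy = granger_f(x, y)   # does x help predict y?
f_yx = granger_f(y, x)   # does y help predict x?
print("F(x -> y) =", f_xy, " F(y -> x) =", f_yx)
```

Running the test in both directions is what exposes the asymmetry: on this synthetic pair the statistic for x -> y should be far larger than the one for y -> x.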

For details, please consult the following papers:

Real-time high-performance motion recognition with cluster computers

The Cubic Higher-order Local Auto Correlation (CHLAC) feature, an extension of

the well-known autocorrelation, extracts spatio-temporal characteristics of time series data.

Several authors have reported that CHLAC features give good results in motion recognition tasks on

movie data.
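
To give a feel for what a higher-order local autocorrelation over a video volume computes, here is a much simplified sketch. Each feature sums, over all interior positions, the product of the voxel value and the values at a few fixed displacements inside a 3x3x3 neighbourhood. The three displacement patterns below are hand-picked for illustration and are an assumption; the actual CHLAC feature uses a full, systematically enumerated set of patterns, and any preprocessing it applies is not shown here.

```python
import numpy as np

def shifted(volume, d):
    """Interior of `volume` displaced by d = (dt, dy, dx), each in {-1, 0, 1}."""
    T, H, W = volume.shape
    dt, dy, dx = d
    return volume[1 + dt : T - 1 + dt, 1 + dy : H - 1 + dy, 1 + dx : W - 1 + dx]

def local_autocorrelation(volume, patterns):
    """One feature per pattern: sum over r of f(r) * f(r + a1) * ... * f(r + aN)."""
    center = shifted(volume, (0, 0, 0))
    features = []
    for displacements in patterns:
        product = center.copy()
        for d in displacements:
            product = product * shifted(volume, d)
        features.append(product.sum())
    return np.array(features, dtype=float)

# Hand-picked illustrative patterns (not the full CHLAC set).
patterns = [
    [],              # order 0: total activity
    [(1, 0, 0)],     # order 1: same pixel in the next frame (static content)
    [(1, 0, 1)],     # order 1: one pixel to the right in the next frame
]

# Toy video (frames, height, width): a single dot moving right by one pixel per frame.
video = np.zeros((5, 6, 6))
for t in range(5):
    video[t, 2, 1 + t] = 1.0

features = local_autocorrelation(video, patterns)
print(features)
```

On this toy video the pattern aligned with the motion responds while the static pattern does not, which is the kind of spatio-temporal cue a recognizer can then classify.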

In this research, we built a real-time motion recognition system using CHLAC features.

A video camera captures movies of human actions.

CHLAC features are calculated from those movies, and the recognizer classifies the stream of

feature vectors into one of the learned human motions.

These components are implemented on a cluster computing environment,

which makes real-time motion recognition possible.

For details, please consult the following paper: