Papers

- Hirokazu Kameoka, Kou Tanaka, Aaron Valero Puche, Yasunori Ohishi, Takuhiro Kaneko, "Crossmodal Voice Conversion," arXiv:1904.04540 [cs.SD], Apr. 2019. (PDF)

Crossmodal Voice Conversion

Humans are able to imagine a person's voice from the person's appearance and imagine the person's appearance from his/her voice. This may indicate the possibility of being a certain correlation between voices and appearance, as discussed in [1]. Here, an interesting question is whether it is technically possible to predict the voice of a person only from an image of his/her face and predict a person’s face only from his/her voice. Our method aims to convert speech into a voice that matches an input face image and generate a face image that matches the voice of the input speech by leveraging the underlying correlation between faces and voices.

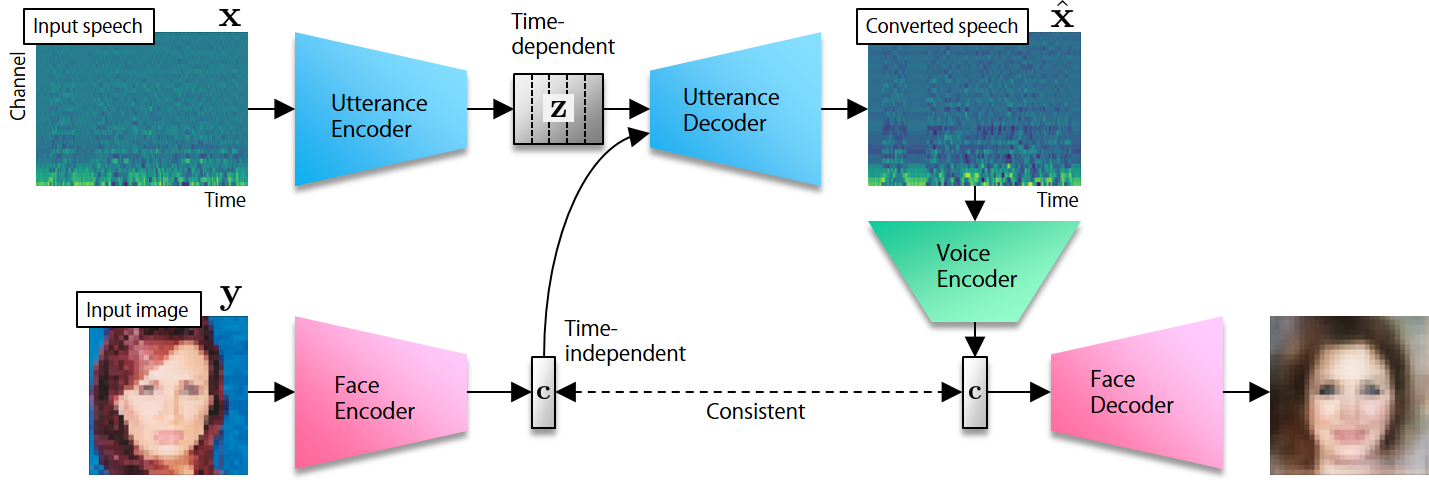

Model

We propose a model, consisting of an utterance encoder/decoder, a face encoder/decoder and a voice encoder (Figure 1). We use the latent code of an input face image encoded by the face encoder as the auxiliary input into the utterance decoder and train the utterance encoder/decoder so that the original latent code can be recovered from the generated speech by the voice encoder. We also train the face decoder along with the face encoder to ensure that the latent code will contain sufficient information to reconstruct the input face image. We confirmed experimentally that the utterance encoder/decoder and face encoder trained in this way were able to convert input speech into a voice that matched an input face image and that the voice encoder and face decoder were able to generate a face image that matched the voice of the input speech.

Links to related pages

Please also refer to the following web sites.

Speech converted using face images

Here, we show several examples of speech converted according to auxiliary face image inputs.

| Input speech | Input image | Converted speech | ||

|---|---|---|---|---|

| + |

|

→ | ||

| + |

|

→ | ||

| + |

|

→ |

Face images predicted from speech

Here, we show several examples of the face images predicted by the proposed method from female/male and young/aged speech.

| Input speech | Predicted face image | |

|---|---|---|

| → |

|

|

| → |

|

|

| → |

|

|

| → |

|

News/Media

References

[1] H. M. J. Smith, A. K. Dunn, T. Baguley, and P. C. Stacey, "Concordant cues in faces and voices: Testing the backup signal hypothesis," Evolutionary Psychology, vol. 14, no. 1, pp. 1–10, 2016.