A paper presented at ACM Multimedia 2022

ConceptBeam: Concept Driven Target Speech Extraction

We are pleased to announce that our paper "ConceptBeam: Concept Driven Target Speech Extraction" by Yasunori Ohishi, Marc Delcroix, Tsubasa Ochiai, Shoko Araki, Daiki Takeuchi, Daisuke Niizumi, Akisato Kimura, Noboru Harada, and Kunio Kashino has been accepted to ACM Multimedia 2022 (acceptance rate = 690/2473 = 27.9%).

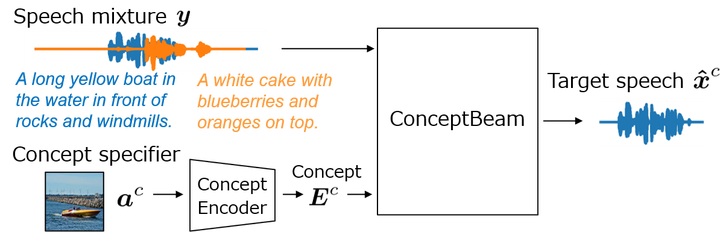

This paper proposes ConceptBeam, a novel framework for target speech extraction based on semantic information. Target speech extraction is the task of extracting the speech of a target speaker from a mixture of overlapping speakers. Typical approaches exploit properties of audio signals, such as harmonic structure and direction of arrival. In contrast, ConceptBeam tackles the problem with semantic clues. Specifically, we extract the speech of speakers talking about a concept, i.e., a topic of interest, using a concept specifier such as an image or a speech signal. Solving this novel problem would open the door to innovative applications, such as listening, search, and summarization systems that focus on a particular topic discussed in a conversation.