Science of Computation and Language

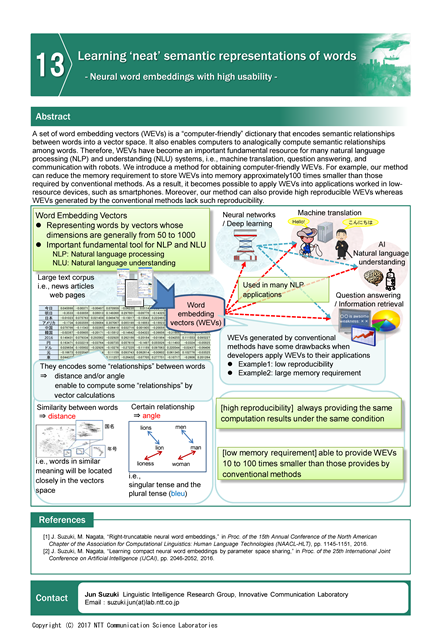

Learning ‘neat’ semantic representations of words

Neural word embeddings with high usability

Abstract

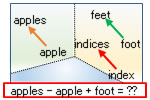

A set of word embedding vectors (WEVs) is a “computer-friendly” dictionary that encodes semantic relationships between words in a vector space. It also enables computers to compute semantic relationships among words by analogy. WEVs have therefore become a fundamental resource for many natural language processing (NLP) and natural language understanding (NLU) systems, e.g., machine translation, question answering, and communication with robots. We introduce a method for obtaining computer-friendly WEVs. For example, our method can reduce the memory required to store WEVs to approximately 1/100 of that required by conventional methods. As a result, WEVs can be used in applications that run on low-resource devices such as smartphones. Moreover, our method yields highly reproducible WEVs, whereas WEVs generated by conventional methods lack such reproducibility.
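The analogical computation mentioned in the abstract can be illustrated with a minimal sketch. The three-dimensional vectors below are invented for illustration and are not WEVs produced by the method; the names `toy_wevs`, `cosine`, and `analogy` are likewise hypothetical. The sketch answers "man is to king as woman is to ?" by forming the vector king - man + woman and picking the remaining word whose vector is closest by cosine similarity, a common way of querying embeddings in general.

```python
import math

# Toy 3-dimensional vectors, invented for illustration only.
# Real WEVs typically have far more dimensions and are learned from text.
toy_wevs = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.5, 0.5, 0.5],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c, wevs):
    """Solve "a is to b as c is to ?" using the vector b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(wevs[a], wevs[b], wevs[c])]
    # Exclude the three query words so the answer is a new word.
    candidates = [w for w in wevs if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, wevs[w]))


print(analogy("man", "king", "woman", toy_wevs))  # prints "queen" for these toy vectors
```

With these hand-picked vectors the result is "queen"; with learned WEVs the answer depends on the training data and method.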

Presenters

Jun Suzuki

Innovative Communication Laboratory

Katsuhiko Hayashi

Innovative Communication Laboratory

Sho Takase

Innovative Communication Laboratory
