Science of Media Information

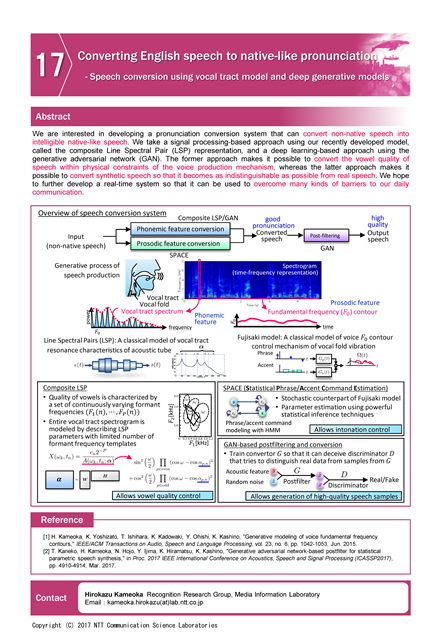

Converting English speech to native-like pronunciation

Speech conversion using vocal tract model and deep generative models

Abstract

We aim to develop a pronunciation conversion system that converts non-native English speech into intelligible, native-like speech. We pursue two approaches: a signal processing-based approach using our recently developed model, the composite Line Spectral Pair (LSP) representation, and a deep learning-based approach using a generative adversarial network (GAN). The former makes it possible to convert the vowel quality of speech within the physical constraints of the voice production mechanism, whereas the latter makes it possible to convert synthetic speech so that it becomes as indistinguishable as possible from real speech. We hope to extend this work into a real-time system that can help overcome many kinds of barriers in everyday communication.
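The composite LSP model itself is not specified on this page. The following is therefore only a minimal sketch of the conventional LSP representation that the name refers to, not the authors' composite model. It converts linear prediction (LPC) coefficients of an all-pole vocal tract filter A(z) into line spectral frequencies (LSFs). The function name `lpc_to_lsf` and the tolerance value are hypothetical choices made for illustration.

```python
import numpy as np


def lpc_to_lsf(a, eps=1e-6):
    """Hypothetical helper: convert LPC coefficients [1, a1, ..., ap] of A(z)
    into the p line spectral frequencies (radians, sorted, in (0, pi)).

    Conventional (non-composite) LSP construction:
        P(z) = A(z) + z^{-(p+1)} A(z^{-1})   (symmetric)
        Q(z) = A(z) - z^{-(p+1)} A(z^{-1})   (antisymmetric)
    For a minimum-phase A(z), the roots of P and Q lie on the unit circle
    and interlace. Their angles in (0, pi) are the LSFs.
    """
    a = np.asarray(a, dtype=float)
    if a.ndim != 1 or a.size < 2 or a[0] != 1.0:
        raise ValueError("expected coefficients [1, a1, ..., ap] with p >= 1")
    a_ext = np.append(a, 0.0)  # A(z) padded to degree p+1
    a_rev = a_ext[::-1]        # coefficients of z^{-(p+1)} A(z^{-1})
    p_poly = a_ext + a_rev     # P(z), leading coefficient 1
    q_poly = a_ext - a_rev     # Q(z), leading coefficient 1
    roots = np.concatenate([np.roots(p_poly), np.roots(q_poly)])
    angles = np.angle(roots)
    # Keep one root from each conjugate pair (positive angle) and drop the
    # trivial roots at z = +1 (angle 0) and z = -1 (angle +/- pi).
    lsf = angles[(angles > eps) & (angles < np.pi - eps)]
    return np.sort(lsf)


# Usage: a 2nd-order resonator with poles at 0.9 * exp(+/- j*pi/4).
r, theta = 0.9, np.pi / 4
a = [1.0, -2 * r * np.cos(theta), r ** 2]
print(lpc_to_lsf(a))  # two LSFs that bracket the resonance angle pi/4
```

LSFs are well suited to manipulating vowel quality because closely spaced LSF pairs tend to sit near spectral peaks (formants). Also, any sorted, interlaced set of LSFs maps back to a stable all-pole filter, so edits made in the LSF domain can keep the filter physically plausible.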

Presenters

Takuhiro Kaneko

Media Information Laboratory

Chihiro Watanabe

Media Information Laboratory

Shigemi Aoyagi

Media Information Laboratory

Ko Tanaka

Media Information Laboratory
