Exhibition Program

Science of Machine Learning

04

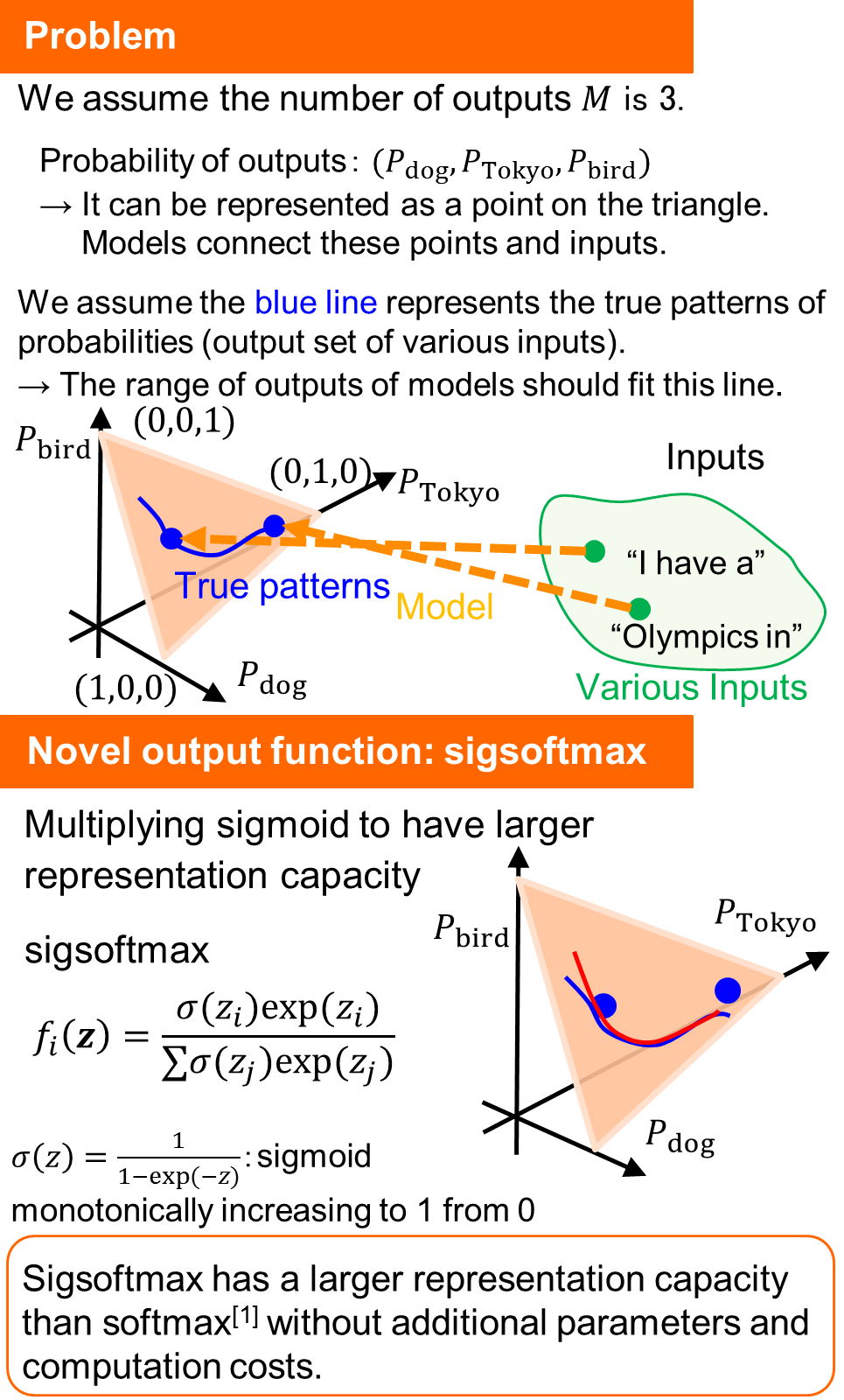

Improving the accuracy of deep learning

- Larger capacity output function for deep learning -

Abstract

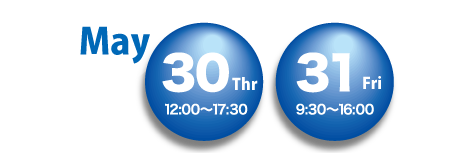

Deep learning is used in a lot of applications, e.g., image recognition, speech recognition, and machine

translation. In many applications of deep learning, softmax is used as an output activation function for modeling

categorical probability distributions. To represent various probabilities, models should output various patterns, i.e.,

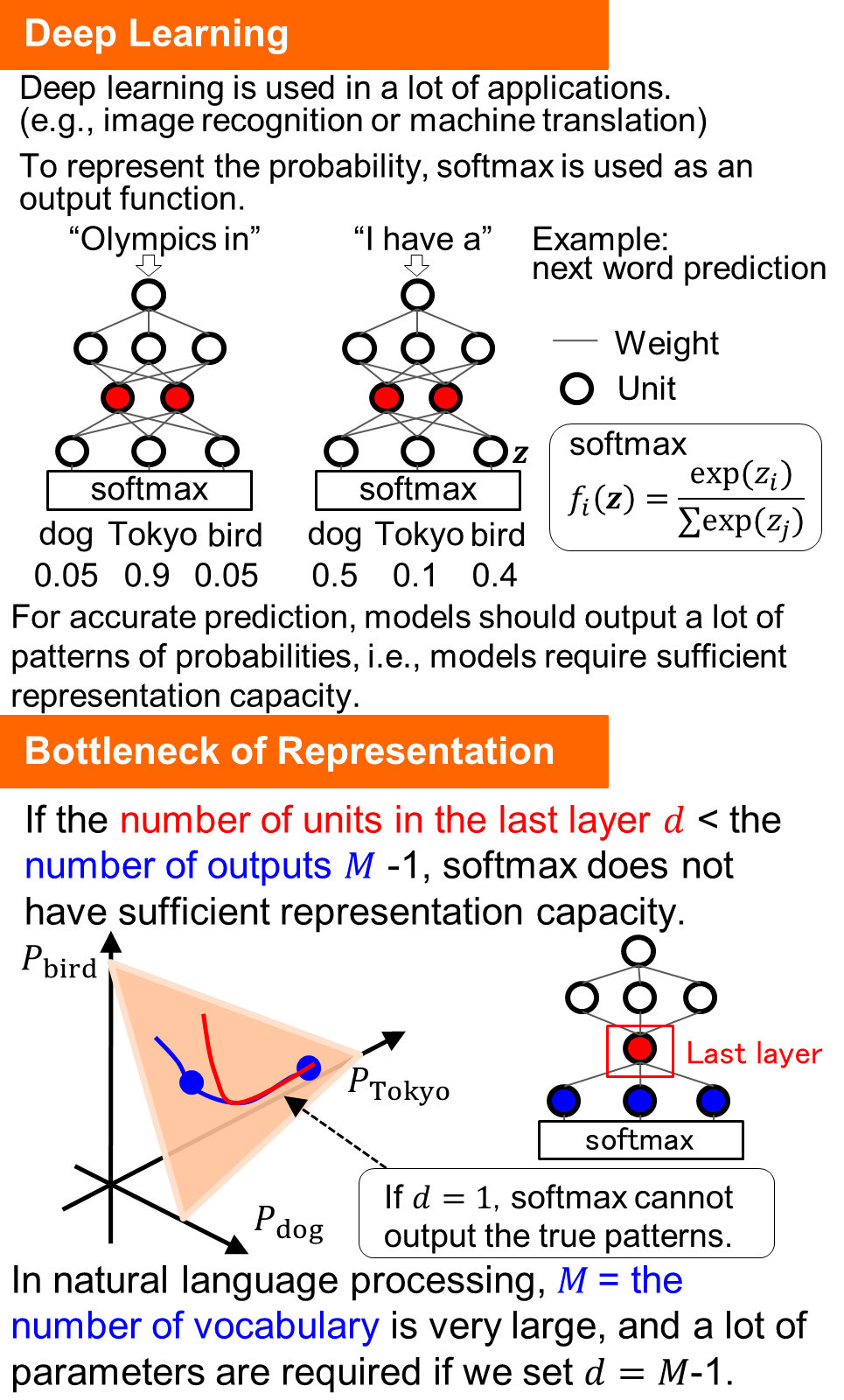

models should have sufficient representation capacity. However, softmax can be a bottleneck of representational

capacity (the softmax bottleneck) under a certain condition. In order to break the softmax bottleneck, we propose

a novel output activation function: sigsoftmax. To break the softmax bottleneck, sigsoftmax is composed of

sigmoid and exponential functions. Sigsoftmax can output more various patterns than softmax without additional

parameters and additional computation costs. As a result, the model with sigsoftmax can be more accurate than

that with softmax.
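As a rough illustration of the idea in the abstract, the following minimal sketch compares softmax with a sigsoftmax-style activation. It assumes the form in which each logit z_i is weighted by exp(z_i) · sigmoid(z_i) before normalization, which is our reading of "composed of sigmoid and exponential functions"; see [1] for the authoritative definition. The function names (`log_sigmoid`, `softmax`, `sigsoftmax`) are chosen for this example and are not taken from any library.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    # Branch on the sign of x so that exp() never receives a large positive argument.
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def softmax(logits):
    """Standard softmax, shifted by the max logit for stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigsoftmax(logits):
    """Sigsoftmax-style activation (assumed form): weights exp(z) * sigmoid(z), normalized."""
    # Work in log space: log(exp(z) * sigmoid(z)) = z + log(sigmoid(z)).
    log_w = [z + log_sigmoid(z) for z in logits]
    m = max(log_w)
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    return [x / total for x in w]


if __name__ == "__main__":
    z = [2.0, 0.0, -2.0]
    print("softmax:   ", softmax(z))
    print("sigsoftmax:", sigsoftmax(z))
```

Both functions take the same logits and introduce no extra parameters; under the assumed form, the only difference is the additional sigmoid factor applied before normalization.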

References

- [1] S. Kanai, Y. Fujiwara, Y. Yamanaka, S. Adachi, “Sigsoftmax: Reanalysis of the softmax bottleneck,” in Proc. 32nd Conference on Neural Information Processing Systems (NeurIPS), 2018.


Contact

Sekitoshi Kanai, NTT Software Innovation Center
