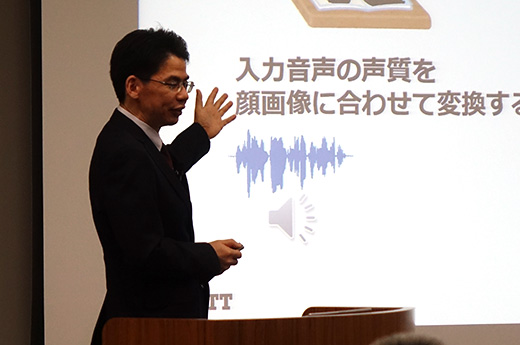

Research Talk

May 31st (Fri) 11:00 - 11:40

See, Hear, and Learn to Describe

- Crossmodal information processing opens the way to smarter AI -

Kunio Kashino, Media Information Laboratory

Abstract

Recent advances in AI and machine learning research are breaking down the barriers between modalities such as language, sounds, and images, which until now have been studied separately. This dramatic change has various implications. Most importantly, AI is now achieving a new breakthrough: the ability to learn "concepts" on its own from multimodal inputs. The key is to acquire, analyze, and utilize common representations that can be shared across these modalities. We call this approach crossmodal information processing. This talk will introduce the concept and discuss how it will help us in our future lives.

Speaker

Kunio Kashino

Media Information Laboratory