| 02 |

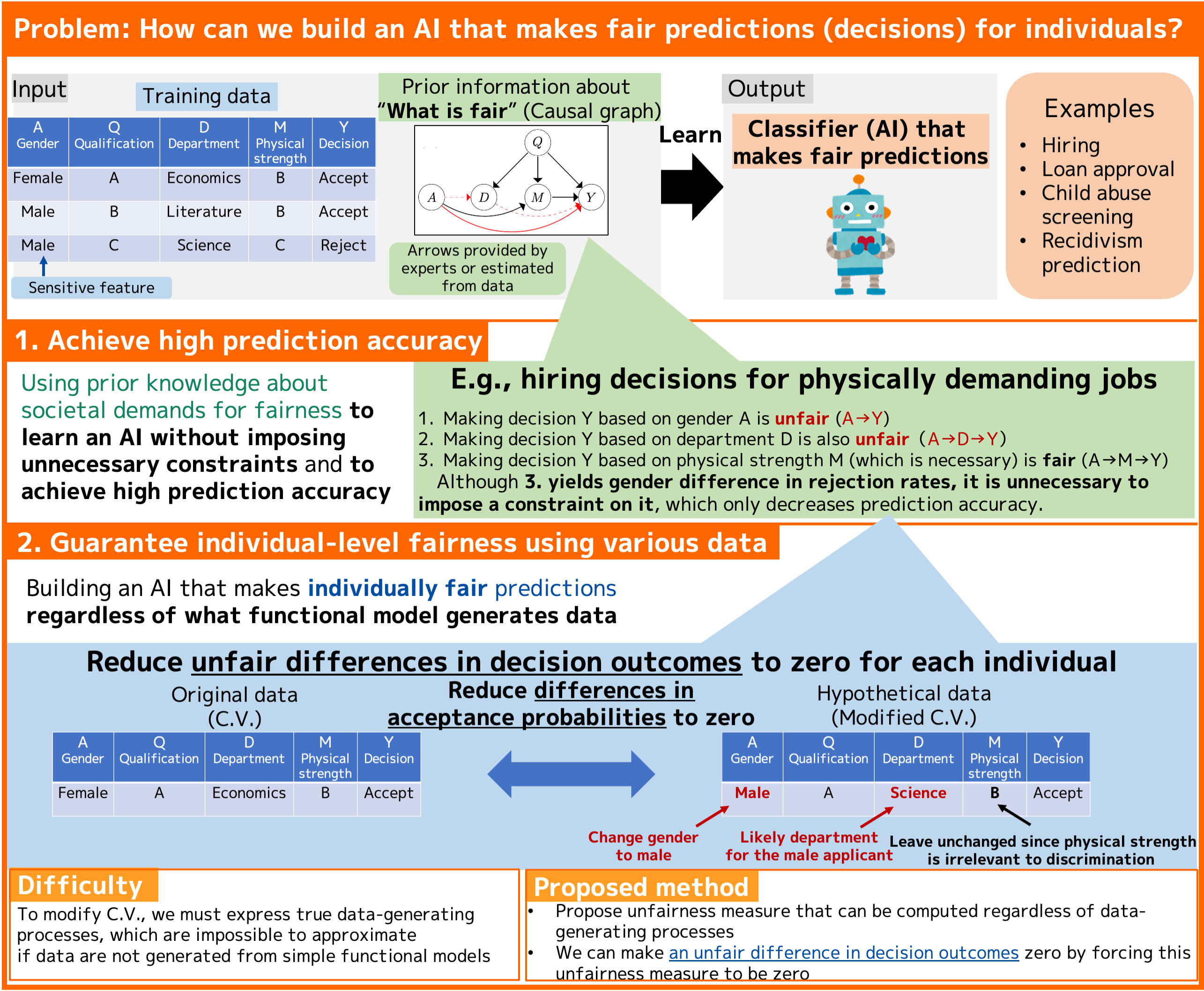

Ask me how to make a fair decision for everyoneLearning individually fair classifier based on causality

|

|---|

Machine learning predictions are increasingly used to make critical decisions that severely affect people’s lives, such as loan approvals, hiring, and recidivism prediction. For such decisions, we developed a novel machine learning technology whose predictions are both accurate and fair with respect to sensitive features such as gender, race, religion, and sexual orientation. To achieve high prediction accuracy, we use prior information about the societal demands of each decision-making scenario, e.g., “rejecting applicants based on physical strength is fair if the job requires physical strength.” Existing methods can ensure fairness only when the data are generated by a restricted class of functions, whereas our proposed method can guarantee fairness for a wider variety of data. Acknowledging that “what is fair” depends on particular societal values, we create machine learning technologies that respond more flexibly to societal demands by bridging the gap between technical limitations and societal needs. In this way, we hope to help build a society that can make automatic decisions while ensuring that nobody suffers detrimental treatment.
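To make the hiring example concrete, here is a minimal sketch in Python. It is not the method of [1], which constrains path-specific causal effects; it is a much simpler stand-in that penalizes only the direct influence of a sensitive feature on the prediction while leaving the influence through physical strength untouched. The synthetic data, the variable names (`a` for the sensitive feature, `q` for physical strength), the squared penalty, and the weight `lam` are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def direct_effect(w, q):
    """Change in predicted probability when only `a` flips from 0 to 1, with q held fixed."""
    w_a, w_q, b = w
    return sigmoid(w_a + w_q * q + b) - sigmoid(w_q * q + b)

def train(a, q, y, lam, lr=0.1, steps=3000):
    """Logistic regression with an (assumed) squared penalty on the direct effect of `a`."""
    w = np.zeros(3)  # weights for a, q, and the intercept
    for _ in range(steps):
        w_a, w_q, b = w
        # Gradient of the mean cross-entropy loss.
        err = sigmoid(w_a * a + w_q * q + b) - y
        grad = np.array([np.mean(err * a), np.mean(err * q), np.mean(err)])
        # Gradient of lam * mean(d**2), where d is the per-individual direct effect.
        p1 = sigmoid(w_a + w_q * q + b)
        p0 = sigmoid(w_q * q + b)
        d = p1 - p0
        s1, s0 = p1 * (1 - p1), p0 * (1 - p0)
        grad += lam * np.array([
            np.mean(2 * d * s1),
            np.mean(2 * d * (s1 - s0) * q),
            np.mean(2 * d * (s1 - s0)),
        ])
        w -= lr * grad
    return w

# Hypothetical synthetic data: `a` affects the outcome directly (treated as unfair)
# and through physical strength `q` (treated as fair for this job).
rng = np.random.default_rng(0)
n = 2000
a = rng.integers(0, 2, n).astype(float)
q = 1.0 * a + rng.normal(size=n)
y = (rng.random(n) < sigmoid(1.5 * a + 1.0 * q - 1.0)).astype(float)

w_plain = train(a, q, y, lam=0.0)   # accuracy only
w_fair = train(a, q, y, lam=10.0)   # accuracy plus direct-effect penalty

effect_plain = np.mean(np.abs(direct_effect(w_plain, q)))
effect_fair = np.mean(np.abs(direct_effect(w_fair, q)))
print(f"mean |direct effect| without penalty: {effect_plain:.3f}")
print(f"mean |direct effect| with penalty:    {effect_fair:.3f}")
```

In this sketch the penalized classifier still uses physical strength `q` to predict, which mirrors the idea that some pathways from a sensitive feature to the decision can be deemed fair for a given scenario while others are not.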

[1] Y. Chikahara, S. Sakaue, A. Fujino, H. Kashima, “Learning Individually Fair Classifier with Path-Specific Causal-Effect Constraint,” in Proc. of the 24th International Conference on Artificial Intelligence and Statistics (AISTATS), 2021.

Yoichi Chikahara / Learning and Intelligent Systems Research Group, Innovative Communication Laboratory

Email: cs-openhouse-ml@hco.ntt.co.jp