Exhibition Program

Science of Media Information

22

Listening carefully to your heartbeat

Cardiohemodynamical analysis based on stethoscopic sounds

Abstract

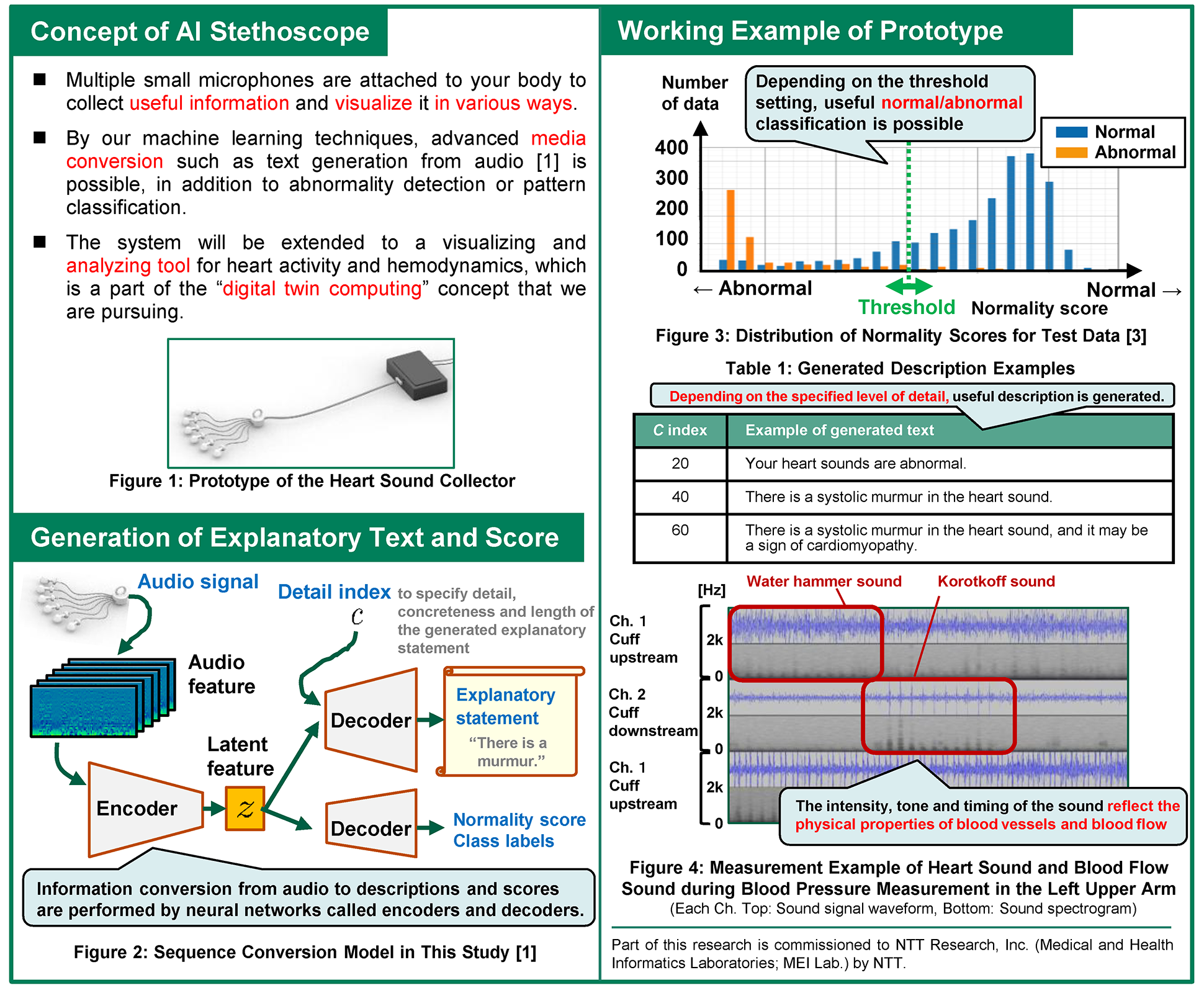

The human body constantly emits a variety of sounds as a result of life activities. Listening to and analyzing those sounds, a practice called auscultation, yields useful information about the function and condition of the body. In this research, we focus on heart sounds and estimate the function and condition of the heart and blood vessels from observed acoustic signals. In our system, multiple microphones are attached to several places on the body, such as the chest, to detect heart activity. From the captured sound, the system estimates the degree of normality as a score and generates an explanatory statement in sentence form. We have confirmed on test data that both the normality estimation and the description generation with a specified degree of detail work effectively. We aim to realize an "AI stethoscope" that contributes to the prevention and early detection of diseases in many people, in the way that skilled doctors can accurately understand and explain a patient's condition through auscultation.

References

- S. Ikawa, K. Kashino, “Neural audio captioning based on conditional sequence-to-sequence model,” in Proc. DCASE 2019 Workshop, 2019.

- M. Nakano, R. Shibue, K. Kashino, S. Tsukada, H. Tomoike, “Gaussian process with physical laws for 3D cardiac modeling,” under review.

- The PhysioNet Computing in Cardiology Challenge, https://physionet.org/content/challenge-2016/1.0.0/, 2016.

Contact

Kunio Kashino / Media Information Laboratory and Biomedical Informatics Research Center

Email:
