| 18 |

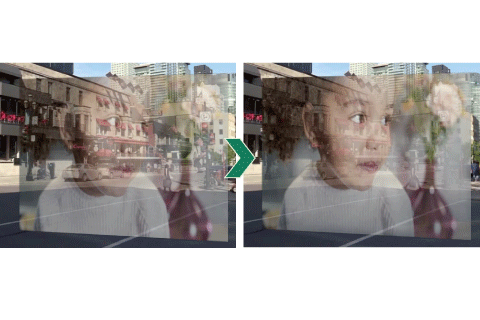

Maintain comfortable visibility anytime, anywhere

Image blending with content-adaptive visibility predictor


The visibility of an image semi-transparently overlaid on another image varies significantly depending on the content of both images. This makes it difficult to maintain a desired visibility level when the image content changes. To tackle this problem, we developed a perceptual model that predicts the visibility of blended images for arbitrary combinations of content. Specifically, we clarified that the influence of each image feature on overall visibility depends on the distribution of features, such as fineness and color, in the presented content. Using a perceptual model that incorporates this effect, we achieved better control over the visibility of blended images than existing techniques. As AR technology matures, information will increasingly be displayed semi-transparently across our entire visual field. Our technique will make it possible to maintain a comfortable visibility level for such information. It also enables more intuitive control of visibility when blending images in video editing software.
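To make the control problem concrete, the following is a minimal sketch of visibility-driven blending: rather than fixing the opacity, it searches for the opacity at which a visibility predictor reaches a target value. The predictor here (`proxy_visibility`, the RMS difference between the blended result and the background) is a deliberately simple placeholder and is not the perceptual model described above. All function names, the flattened grayscale image representation, and the assumption that predicted visibility does not decrease with opacity are illustrative choices, not taken from the original work.

```python
from typing import Callable, List, Sequence

Image = Sequence[float]  # flattened grayscale image, values in [0, 1]


def alpha_blend(overlay: Image, background: Image, alpha: float) -> List[float]:
    """Standard alpha blending: alpha * overlay + (1 - alpha) * background."""
    return [alpha * o + (1.0 - alpha) * b for o, b in zip(overlay, background)]


def proxy_visibility(blended: Image, background: Image) -> float:
    """Placeholder predictor (an assumption, NOT the authors' model):
    RMS difference between the blended result and the bare background."""
    n = len(blended)
    return (sum((x - b) ** 2 for x, b in zip(blended, background)) / n) ** 0.5


def solve_alpha(
    overlay: Image,
    background: Image,
    target: float,
    predictor: Callable[[Image, Image], float] = proxy_visibility,
    iters: int = 30,
) -> float:
    """Bisection over alpha in [0, 1] so that predicted visibility meets `target`.

    Assumes the predictor is non-decreasing in alpha. If the target cannot be
    reached even at full opacity, the result stays at 1.0.
    """
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if predictor(alpha_blend(overlay, background, mid), background) < target:
            lo = mid  # not visible enough yet: raise opacity
        else:
            hi = mid  # target met: try a lower opacity
    return hi


# Same overlay and target, two different backgrounds.
overlay = [1.0, 0.0, 1.0, 0.0]
alpha_on_dark = solve_alpha(overlay, [0.0, 0.0, 0.0, 0.0], target=0.1)
alpha_on_similar = solve_alpha(overlay, [0.8, 0.2, 0.8, 0.2], target=0.1)
```

With this placeholder, the background that resembles the overlay requires a higher opacity to reach the same target, which illustrates why a fixed opacity cannot hold visibility constant across content. A content-adaptive perceptual predictor could be passed in through the `predictor` argument in place of the proxy.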

[1] T. Fukiage, T. Oishi, “A computational model to predict the visibility of alpha-blended images,” Vision Sciences Society Annual Meeting 2021 (Abstract published in: Journal of Vision, Vol. 21, No. 2493).

[2] T. Fukiage, T. Oishi, “Perception-based image blending based on content-adaptive visibility predictor,” in Proc. Special Interest Group on Computer Vision and Image Media (CVIM), Vol. 229, No. 45, pp. 1–8, 2022 (in Japanese).

Taiki Fukiage / Sensory Representation Research Group, Human and Information Science Laboratory

Email: cs-openhouse-ml@hco.ntt.co.jp