| 19 |

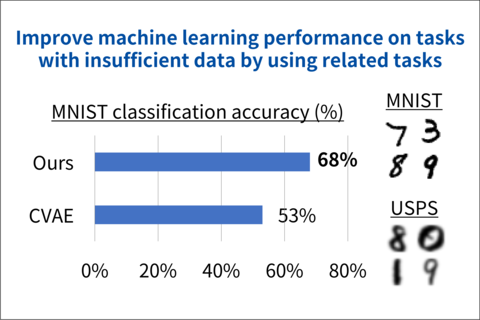

Representation learning for insufficient training dataLearning priors for multi-task representation learning

|

|---|

Representation learning, which obtains effective features and patterns from data, is widely used in machine learning. However, it does NOT perform well with insufficient training data. To solve this problem, we focus on the prior knowledge used in representation learning. We reveal that the commonly used simple prior knowledge is one cause of performance degradation and propose a new prior knowledge that is learned by using data from the related tasks. Experiments show that even with only a few hundred data points, representation learning with our prior knowledge improves machine learning performance by up to 15%. We will apply this approach to real-world anomaly detection problems with insufficient data, such as new cars or factories under development.

[1] H. Takahashi, T. Iwata, A. Kumagai, S. Kanai, M. Yamada, Y. Yamanaka, H. Kashima, “Learning Optimal Priors for Task-Invariant Representations in Variational Autoencoders,” in Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2022.

Hiroshi Takahashi, Learning and Intelligent Systems Research Group, Innovative Communication Laboratory