| 15 |

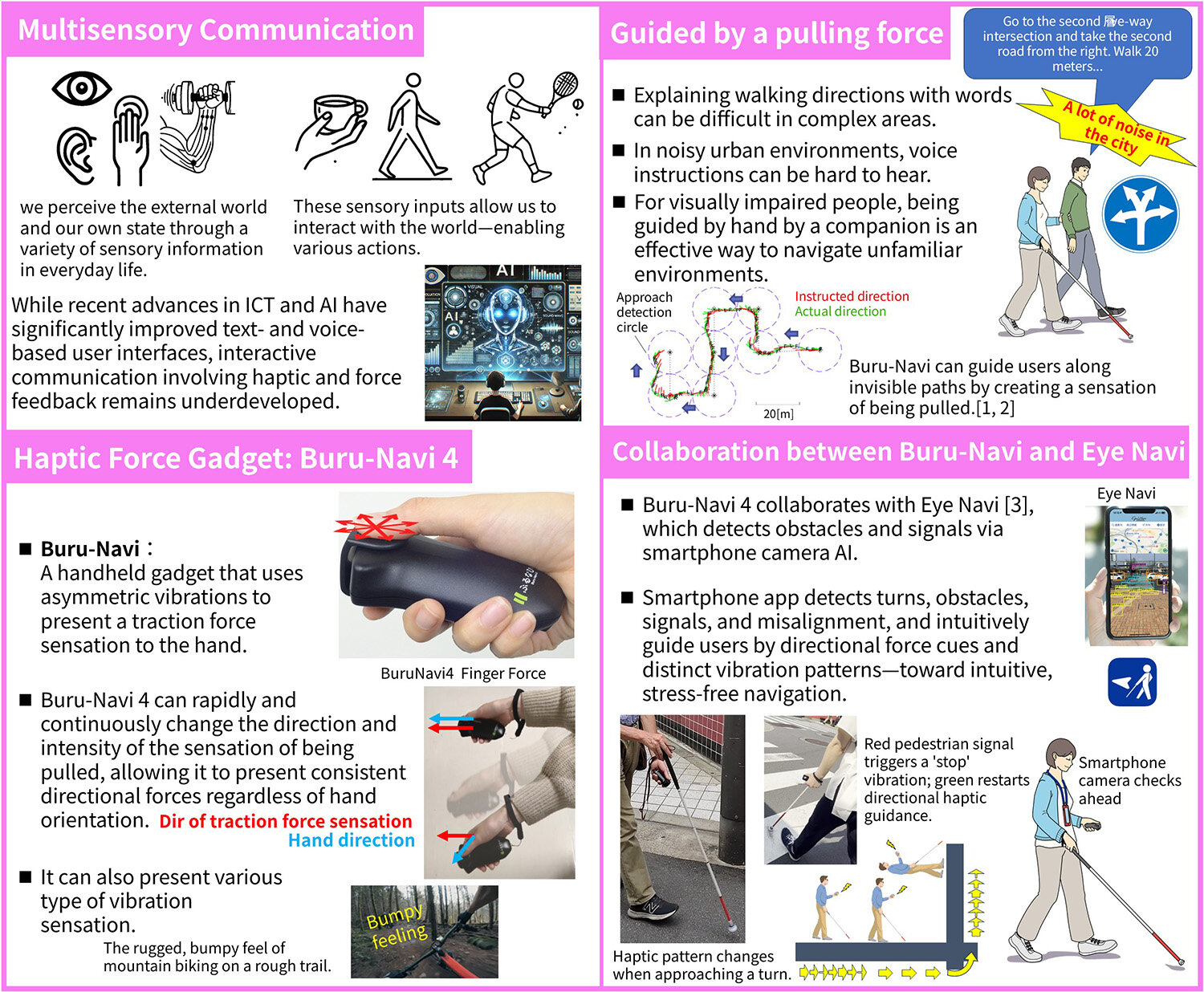

Let me lead you through the city, empowered by AI

Toward a real-world deployment of the tactile gadget Buru-Navi 4


What if you could be guided not just by words or maps, but by the sensation of being pulled in the right direction? We are exploring a new form of multi-sensory communication that helps people with visual impairments, as well as people unfamiliar with their surroundings, navigate intuitively through somatosensation. By combining the compact haptic device Buru-Navi 4 with Eye Navi, which uses AI to recognize the environment through a smartphone camera, we have developed a real-world pedestrian navigation system that guides users to their destination with a tactile pull. This is a major step toward a more inclusive future in which the world's 45 million visually impaired people, and anyone else who needs navigation assistance, can move freely around the city. By fusing traction force sensation with camera AI, we aim to break down barriers to mobility and pave the way for a society where everyone can walk with independence and ease.

[1] H. Gomi, S. Ito, R. Tanase, “Innovative mobile force display: Buru-Navi,” in Proc. The 26th International Display Workshops, pp. 962–965, 2019.

[2] Sight World, https://www.sight-world.com/

[3] Eye Navi, Computer Science Institute Co., Ltd., https://www.eyenavi.jp/

Hiroaki Gomi, Sensory and Motor Research Group, Human Information Science Laboratory