Science of Human

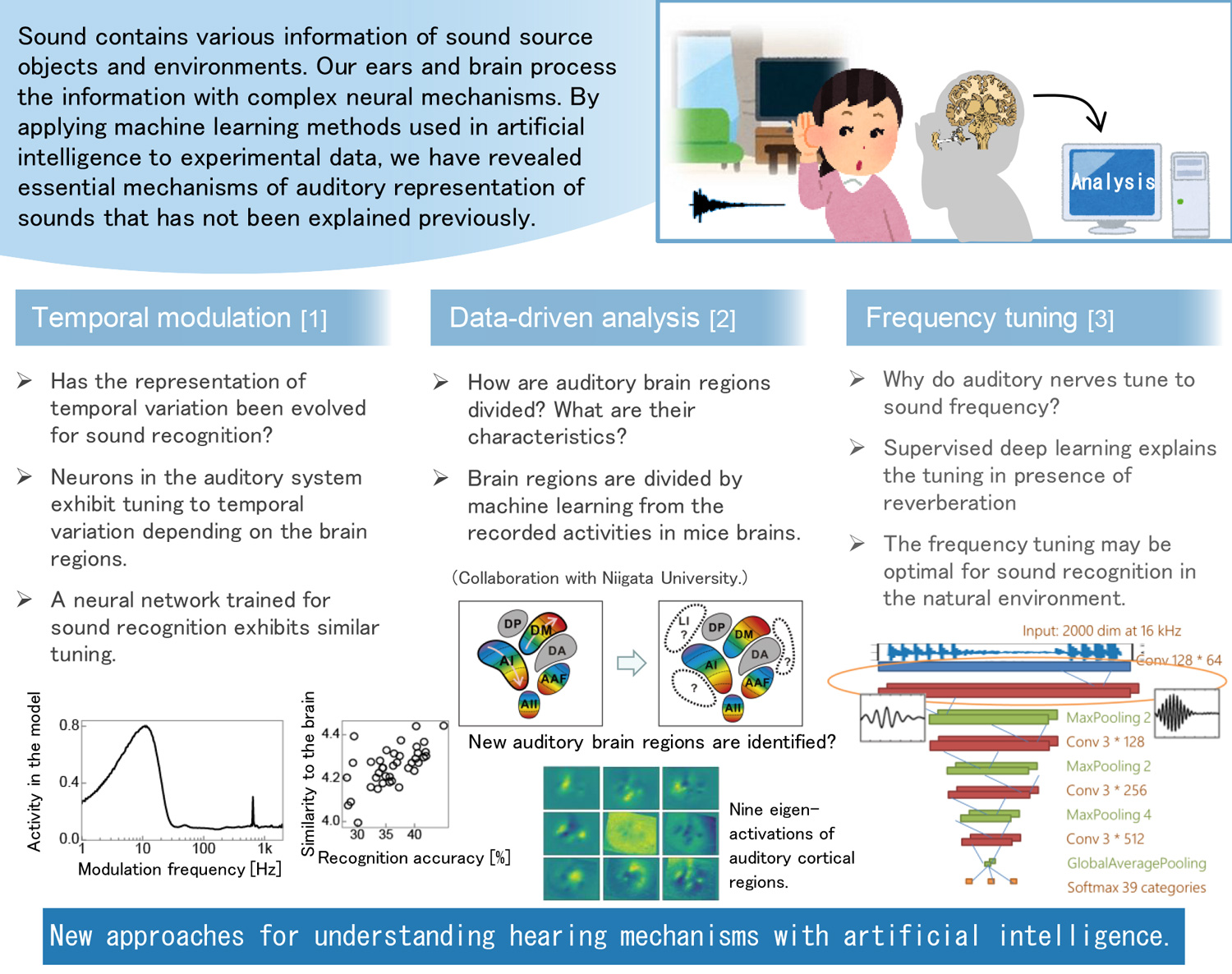

Understanding human hearing with AI

Analyzing auditory neural mechanisms with machine learning

Abstract

In daily life, we encounter sounds with highly complex structure, which are processed by our ears and by a brain containing a vast network of neurons. We are trying to understand the complex relationship between sound, the ear, and the brain with the help of machine learning, the family of techniques on which current “artificial intelligence” is built. In typical cognitive neuroscience studies that follow a hypothesis-testing paradigm, research outcomes depend heavily on the hypotheses designed in advance. We instead employ machine learning techniques to extract relationships from complex data, and are gaining insights into auditory mechanisms without relying on strong hypotheses. Such a paradigm may enable us to find and propose new hypotheses that past research has overlooked, and may lead us to a true understanding of neural mechanisms. Such techniques may also help in designing sounds that take individual hearing abilities into consideration, including those of older adults and people with hearing deficits.

References

- [1] T. Koumura, H. Terashima, S. Furukawa, “Representation of amplitude modulation in a deep neural network optimized for sound classification,” in Proc. 41st Annual Midwinter Meeting of the Association for Research in Otolaryngology, 2018.
- [2] H. Terashima, H. Tsukano, S. Furukawa, “An attempt to analyze brain structure in mice using natural sound stimuli,” in Proc. Winter Workshop of Mechanism of Brain and Mind, 2012.
- [3] H. Terashima, S. Furukawa, “Reconsidering the efficient coding model of the auditory periphery under reverberations,” in Proc. 41st Annual Midwinter Meeting of the Association for Research in Otolaryngology, 2018.

Presenters

Takuya Koumura

Human Information Science Laboratory