| 12 |

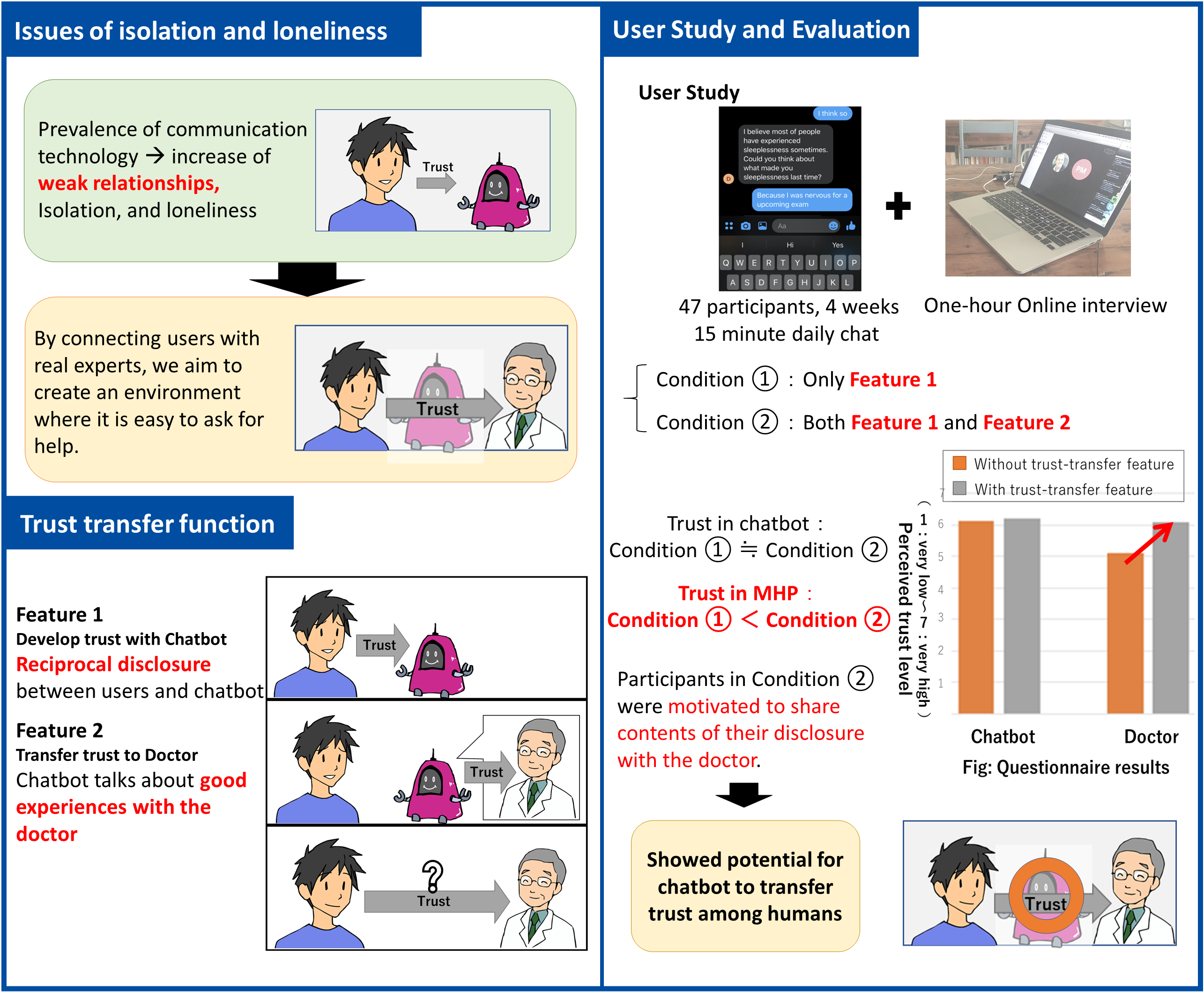

Can a chatbot mediate trust between humans? Bridging doctor-patient rapport through a chatbot


For mental health professionals (MHPs) to understand patients’ mental health, it is critical that patients engage in deep self-disclosure. However, people tend to avoid revealing their vulnerabilities for fear of being judged by others. Chatbots show great potential in this domain: prior research has shown that people tend to disclose symptoms of depression more truthfully when talking to a chatbot than when talking to a human interviewer. Our work extends this research by proposing a novel approach that uses a chatbot to facilitate people's self-disclosure to MHPs. We designed, implemented, and evaluated a chatbot that elicits deep self-disclosure and promotes trust-building between users and MHPs. The results show that people were more willing to share their self-disclosed content with MHPs through the chatbot, which suggests the promise of our approach.

[1] Y. Lee, N. Yamashita, Y. Huang, “Designing a Chatbot as a Mediator for Promoting Deep Self-Disclosure to a Real Mental Health Professional,” in Proc. ACM Hum.-Comput. Interact., vol. 4, no. CSCW1, Article 031 (CSCW '20), ACM, pp. 1–27, 2020.

[2] Y. Lee, N. Yamashita, W. Fu, Y. Huang, “‘I Hear You, I Feel You’: Encouraging Deep Self-disclosure through a Chatbot,” in Proc. 2020 CHI Conference on Human Factors in Computing Systems (CHI '20), ACM, pp. 1–12, 2020.

Naomi Yamashita / Interaction Research Group, Innovative Communication Laboratory

Email: cs-openhouse-ml@hco.ntt.co.jp